Across the many clients I’ve served over the years, I’ve found that most websites have at least two or three areas of opportunity that are quick and easy to address. Among these are some of the most vital areas of SEO: ensuring your website is available, crawlable, and shows up on search engines like Google. One example is having a published sitemap.xml that is referenced in the robots.txt file and submitted to Google Search Console, which I’ll walk you through in this article. This is particularly important if you have a lot of pages on your website.

As a general concept, I like to keep in mind that websites are for people. Search engines crawl these websites and offer them up for people. Sometimes, we forget the context of the individual and end up over-focusing on the technology—especially when covering the more technical aspects of SEO. So now, let’s take a look at some of the practical quick wins of SEO.

What You’ll Learn

- Quick Wins for SEO: Discover low-hanging opportunities to improve your site’s SEO performance with minimal effort.

- How to Configure and Optimize Your Sitemap.xml: Learn to ensure your sitemap is live, accurate, and correctly submitted to search engines on popular website management platforms.

- Using Google Search Console for SEO Hygiene: Understand how GSC helps monitor your site’s performance, submit sitemaps, and identify crawl issues.

- Best Practices for Configuring Your Robots.txt File: Properly reference your sitemap for better crawling.

- Enhancing H1 Tags for Clarity and SEO Impact: Find out how to write clear, effective H1s that improve both search visibility and engagement.

- Identifying and Resolving 404 Errors: Use tools like Google Analytics and Screaming Frog to detect and fix broken pages on your site.

- How to Implement Redirects the Right Way: Learn to set up 301 redirects correctly to maintain SEO value and ensure smooth user navigation across platforms.

Sitemap.xml Essentials and Configuration

Think of the sitemap.xml as a roadmap for search engines. It tells them what content exists on your site and what should be indexed. A sitemap helps ensure that everything you want crawled and indexed can be found by search engines like Google and, through them, by people. You’ll submit it to tools like Google Search Console (more on this later) to make sure search engines know how to access your content.

Best practices for sitemaps boil down to a few key points:

- Ensure your sitemap is live and published

- Only include pages that load without issues

- Make sure the pages listed are ones you want to appear in search engine results

What about AI? Even with Generative AI-powered search engines, a sitemap remains essential for effective crawling. AI is changing search capabilities, but the fundamentals of how content gets discovered have not changed at this point. AI-powered search still relies on crawled data, and sitemaps are one of the inputs that guide that crawling.

Creating and Configuring Your Sitemap

In many cases, your website will already have a dynamic sitemap that updates automatically. Platforms like Squarespace and Shopify publish sitemaps by default. However, with some CMS platforms, you may need to activate and verify the sitemap yourself. For example, WordPress users can use the Yoast SEO plugin to generate one easily.

Here’s how to check if your sitemap is published:

- Visit: https://[yourwebsite]/sitemap.xml

- If this redirects to /sitemap-index.xml, that’s perfectly fine!

- Example from Squarespace: https://www.guineydesign.com/sitemap.xml

If the sitemap loads and displays in a coded format, great! That means it’s live. If you see a “page not found” message or something similar, you’ll need to configure your sitemap. Here’s how to generate or activate the sitemap on popular website platforms:

- WordPress: Use the Yoast SEO plugin to create a sitemap.

- Squarespace: Automatically created and published.

- Shopify: Automatically generated and published.

- HubSpot CMS Hub: View and edit your sitemap in your HubSpot account settings.

- Wix: Automatically created if you complete the SEO setup checklist.

- Drupal: Use the Simple XML Sitemap module to configure your sitemap.

- Sitecore: Configure the sitemap following Sitecore’s documentation.
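The manual check described earlier can also be scripted. What follows is a minimal Python sketch rather than an official tool. It assumes the sitemap follows the standard sitemaps.org XML format. The function names (`parse_sitemap`, `fetch_sitemap`) and the example.com URLs are hypothetical and used only for illustration.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def parse_sitemap(xml_bytes):
    """Return (kind, locations) for a sitemap document.

    kind is "urlset" for a regular sitemap or "sitemapindex" for an
    index file that points at other sitemaps.
    """
    root = ET.fromstring(xml_bytes)
    kind = root.tag.replace(SITEMAP_NS, "")
    locations = [
        loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text
    ]
    return kind, locations


def fetch_sitemap(url):
    """Download a sitemap and parse it (makes a real network request)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return parse_sitemap(response.read())


# Hypothetical sitemap content used to try the parser offline.
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/shop</loc></url>
</urlset>"""

kind, locations = parse_sitemap(SAMPLE)
print(kind, len(locations))
```

If `kind` comes back as "sitemapindex", the listed locations are themselves sitemaps, which matches the redirect to a sitemap index mentioned above.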

Once you’ve verified that your sitemap is active, it’s time to follow a few best practices to keep it optimized.

Best Practices for Sitemap.xml

Structuring your sitemap correctly ensures that search engines can efficiently crawl your site and improve your visibility in search results. Keep it clean by following these tips:

- Use Full URLs: Each entry should include the full URL (e.g., https://www.example.com/shop) rather than just the path (/shop).

- Only Include Live Pages: All URLs should lead to published pages that load without errors. You can:

- Resolve 404 (Not Found) or 500 (Server Error) pages.

- Remove or update any 301/302 redirects listed in the sitemap.

- Remove ‘noindex’ Pages: Avoid including pages with ‘noindex’ directives in your sitemap, as they tell search engines not to index them. Use Google Search Console or Screaming Frog to identify and remove these pages.
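These checks can be expressed as a small audit routine. The sketch below is illustrative only. It assumes you already have crawl results (for example, exported from a crawler) giving the status code and noindex state of each sitemap entry. The function name `audit_sitemap_entry` and the sample data are hypothetical.

```python
from urllib.parse import urlparse


def audit_sitemap_entry(url, status, noindex=False):
    """Return a list of problems for one sitemap entry.

    status is the HTTP status code observed when requesting the URL;
    noindex says whether the page carries a noindex directive.
    """
    problems = []
    parts = urlparse(url)
    if not (parts.scheme in ("http", "https") and parts.netloc):
        problems.append("not a full URL")
    if status in (301, 302, 307, 308):
        problems.append("redirects; list the final destination instead")
    elif status >= 400:
        problems.append(f"returns an error ({status})")
    if noindex:
        problems.append("marked noindex")
    return problems


# Hypothetical crawl results: (url, status code, noindex flag).
crawl = [
    ("https://www.example.com/shop", 200, False),
    ("/shop", 200, False),
    ("https://www.example.com/old-page", 301, False),
    ("https://www.example.com/missing", 404, False),
    ("https://www.example.com/thank-you", 200, True),
]

for url, status, noindex in crawl:
    for problem in audit_sitemap_entry(url, status, noindex):
        print(f"{url}: {problem}")
```

An entry that produces no output passes all three checks and can stay in the sitemap.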

Utilizing Google Search Console

Once you’ve created a sitemap, the next step is to submit it to Google via Google Search Console (GSC). Think of GSC as a monitoring tool for your website’s SEO health, specifically for Google. It provides insights into your site’s performance and highlights potential issues that might be holding you back in search results.

As for other search engines like Bing and DuckDuckGo, the SEO hygiene you perform for Google will usually benefit them as well. If you’re curious or want to explore further data, you can sign up for Bing Webmaster Tools. It’s optional, but I personally enjoy knowing which search engines are crawling and indexing my content. Each tool offers unique features that might benefit you, but if you’re pressed for time, focusing on GSC alone will do just fine.

Setting Up and Navigating Search Console

If you haven’t set up Google Search Console yet, head over to https://search.google.com/search-console and follow the instructions to validate your site. Once that’s done, take some time to explore the various features. Pay special attention to these key sections to track performance and catch issues early:

- Performance Reports (Search Results, Discover): This section shows which search queries are bringing people to your site. Track key metrics like clicks, impressions, and your average ranking position.

- Indexing Reports (Pages, Sitemaps): Find out which pages Google has indexed and identify any issues. Use the Sitemaps report to submit your sitemap (see the next section) and monitor for errors.

- Enhancements: This section highlights areas like mobile usability and AMP (Accelerated Mobile Pages) improvements. It’s crucial to ensure your site works smoothly across devices.

- Security Issues: If Google detects any security threats on your site, you’ll find them here. Address these issues as soon as possible to protect both your visitors and your search rankings.

Submitting Your Sitemap to Google Search Console

Submitting your sitemap helps Google understand which pages to prioritize when crawling your site.

- Log into Google Search Console and navigate to the Sitemaps section under “Indexing”.

- Enter your sitemap URL without the domain (e.g., sitemap.xml or sitemap_index.xml) and click ‘Submit’.

Essentials of robots.txt Configuration

The robots.txt file controls how search engines access your site. Crawlers read this simple text file to understand which areas of your site should be crawled and which should be ignored. SEO best practices include referencing your sitemap within the robots.txt file to ensure search engines can find it easily. However, incorrect configuration can affect your site’s visibility, so it’s important to proceed with care. Don’t go overboard tweaking it if you’re unsure of what to allow or disallow—I’ve seen this happen time and time again!

Understanding Robots.txt Basics

The robots.txt file gives search engines instructions on what they should or should not access. You can find it at:

- https://[yourwebsite]/robots.txt

- Example (WordPress): https://rankbeast.com/robots.txt

In most cases, your website platform will generate a default robots.txt file automatically. But remember: compliance is voluntary. Reputable search engines generally honor the file, but other crawlers may ignore it, and a disallowed page can still show up in search results if other sites link to it. Think of robots.txt as a “do not enter” sign for crawlers: it’s a request, not an enforcement mechanism. A basic WordPress configuration looks like this:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://rankbeast.com/sitemap_index.xml

Be careful not to block search engines from accessing important pages. If your site is already being indexed and showing up in search results, it’s often best to leave the robots.txt file as is. I’ve seen people accidentally block their entire site just by making small changes to the file—so tread carefully.
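One way to reduce the risk of blocking something by accident is to test a robots.txt file before publishing it. The sketch below uses Python’s standard `urllib.robotparser` module on the example configuration above. Treat it as a rough check, not a definitive one: Python’s parser applies the first rule that matches, while Google applies the most specific (longest) matching rule. As a result, the `Allow` line for admin-ajax.php, which comes after the broader `Disallow`, may be evaluated differently here than by Google.

```python
from urllib.robotparser import RobotFileParser

# The WordPress example from this article.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: https://rankbeast.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Regular content is not matched by any Disallow rule, so it is crawlable.
print(parser.can_fetch("*", "https://rankbeast.com/blog/"))

# The admin area is disallowed for every user agent.
print(parser.can_fetch("*", "https://rankbeast.com/wp-admin/"))

# Sitemap lines are collected separately (requires Python 3.8 or later).
print(parser.site_maps())
```

If an important page unexpectedly reports as not fetchable, that is a signal to review the file before it goes live.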

Adding a Sitemap Reference to Your Robots.txt File

Notice the sitemap reference in the example above?

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://rankbeast.com/sitemap_index.xml

Including your sitemap’s location in the robots.txt file makes it easier for search engines to find and crawl it early. This helps search engines understand your site’s architecture, especially for large websites. While it’s not essential for small sites, adding the sitemap reference can still be helpful.
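For sites where you manage the robots.txt file yourself, adding the reference can be sketched as a small helper. This is an illustrative example under that assumption, and many hosted platforms do not allow direct edits. The function name `ensure_sitemap_reference` and the example.com URL are hypothetical.

```python
def ensure_sitemap_reference(robots_txt, sitemap_url):
    """Return robots.txt text that contains a Sitemap line for sitemap_url.

    Existing content is left untouched; the line is appended only when
    no Sitemap line already points at the same URL.
    """
    for line in robots_txt.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "sitemap" and value.strip() == sitemap_url:
            return robots_txt
    # Add a newline first if the file does not already end with one.
    separator = "" if robots_txt.endswith("\n") or not robots_txt else "\n"
    return f"{robots_txt}{separator}Sitemap: {sitemap_url}\n"


original = "User-agent: *\nDisallow: /wp-admin/\n"
updated = ensure_sitemap_reference(original, "https://www.example.com/sitemap.xml")
print(updated)
```

Running the helper a second time on its own output leaves the file unchanged, so it is safe to apply repeatedly.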

Here’s how to edit and add the sitemap reference to your robots.txt file on different platforms:

- WordPress – Use the Yoast SEO plugin: Edit the robots.txt file through Yoast. Note that Yoast may automatically add the sitemap reference for you.

- Squarespace: Automatically configured—no manual changes needed.

- Shopify: Managed automatically—no need to adjust it.

- HubSpot CMS Hub: See the “Use robots.txt files” section of HubSpot’s documentation.

- Wix: See Wix’s guide on how to edit your site’s robots.txt file.

- Drupal: See Drupal’s documentation on where to replace the robots.txt file.

- Sitecore: Configure your robots.txt file within Sitecore.

Enhancing H1 Tags for Better Clarity

Webpage content is organized hierarchically within the HTML, using headings and paragraphs to structure information. Titles, subtitles, sections, and subsections are all considered headings. These headings follow a clear hierarchy:

- H1: The top heading, often the title of the page or article.

- H2: Subheadings that organize major sections under the H1.

- H3: Subheadings nested within H2s, and so on.

Since the H1 is often the first thing both people and search engines see, it plays an important role in conveying the core message of the page. H1s help people and search engines understand the content at a high level. Well-written H1s are simple, direct, and specific to the page’s topic, offering quick SEO benefits when optimized effectively.

What about AI? It’s more important than ever to ensure your H1 reflects what people are looking for. AI systems analyze both the content of your page and search behavior. So, it’s important to have clear, intent-driven headings to help AI match content with the right queries.

Crafting Informative and Concise H1s

If people can’t quickly determine what the page is about from the H1, they are less likely to stay and engage. Confusing H1s at the top of the page can deter individuals from investing their time in your content. A clear, well-structured H1 sets expectations and guides both humans and search engines.

While keywords are important, they shouldn’t dominate the H1. The focus should be on the broader topics and themes that resonate with your audience. Writing naturally for people is always the best approach. Keywords should flow into the H1 organically without sounding forced.

Your H1 should reflect the core message of the page and provide a clear preview of the content. It can follow simple formats like:

- “[WHAT] Services” – Example: “SEO Consulting Services”

- “[WHAT] Products” – Example: “Eco-Friendly Office Supplies”

- “[WHAT] Services [WHERE]” – Example: “Plumbing Services in Austin”

Here are a few key practices to follow when writing effective H1 tags:

- Keep H1s simple and clear: The H1 should convey exactly what the page is about in just a few words.

- Use one H1 per page: Each page should have one clear H1 tag to avoid confusing both people and search engines. Search engines can distinguish between H1s based on size and placement, but it’s best practice to use only one H1 per page. Avoid duplicate H1s across multiple pages.

- Align the H1 with page content: Make sure the H1 accurately reflects the main topic or service covered on the page. A misleading or poorly aligned H1 will cause people to leave quickly.

- Incorporate keywords naturally: Use relevant keywords in the H1 where appropriate, but ensure the phrasing is natural and easy to read. The goal is to make the content readable for people first.

- Keep it short and concise: Aim for H1s to be around 60 characters or fewer. This length keeps the heading readable both on the page and in search results.

- Avoid keyword stuffing: Stuffing H1s with keywords is outdated and ineffective. Search engines and humans prioritize clarity and quality content over excessive keyword use. Focus on creating meaningful, well-phrased H1s.
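Two of the practices above, one H1 per page and a length of roughly 60 characters, are easy to check automatically. The following is a minimal sketch using Python’s standard `html.parser` module. The class and function names (`H1Collector`, `check_h1`) are hypothetical, and the 60-character limit is simply the guideline from this article, not a hard rule.

```python
from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Collect the text of every <h1> element in an HTML document."""

    def __init__(self):
        super().__init__()
        self.h1_texts = []
        self._inside_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._inside_h1 = True
            self.h1_texts.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._inside_h1 = False

    def handle_data(self, data):
        if self._inside_h1:
            self.h1_texts[-1] += data


def check_h1(html, max_length=60):
    """Return a list of warnings about the page's H1 usage."""
    collector = H1Collector()
    collector.feed(html)
    collector.close()
    # Collapse runs of whitespace so the length reflects the visible text.
    headings = [" ".join(text.split()) for text in collector.h1_texts]
    warnings = []
    if len(headings) != 1:
        warnings.append(f"expected one H1, found {len(headings)}")
    for heading in headings:
        if len(heading) > max_length:
            warnings.append(f"H1 longer than {max_length} characters: {heading!r}")
    return warnings


page = "<html><body><h1>Plumbing Services in Austin</h1><h2>Repairs</h2></body></html>"
print(check_h1(page))
```

A script like this cannot judge clarity or relevance, so it complements rather than replaces reading the heading as a visitor would.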

Identifying and Resolving 404 Errors

A 404 Not Found error occurs when someone tries to access a page on your website that doesn’t exist or no longer exists. Think of 404s like potholes on a road: they disrupt the user experience and prevent smooth navigation. These errors stop both visitors and search engine crawlers in their tracks. From a visitor’s perspective, it’s frustrating. They expect to land on the page they were looking for, only to see a “Page not found” message. This can happen for several reasons: the page may have been deleted, the link is broken, or the URL was mistyped. If people can’t find what they need, they’ll likely leave and search elsewhere.

Additionally, a large number of 404 errors can send negative signals to search engines, especially when the missing pages are still linked internally or once earned traffic. This can impact how your site performs in search engine results. Fixing 404s is a quick and effective way to improve your website’s SEO. Regularly monitoring and resolving errors like these is essential for maintaining good website hygiene.

Using Tools to Find 404 Errors

Identifying 404 errors is easy if you know which tools to use. Many tools offer free versions with some limitations, but they’re still effective for finding broken links, deleted pages, and incorrect URLs. Regularly scanning your site for errors ensures that your website stays in good health. Here are some tools I’ve found helpful:

- Google Search Console: Use the “Pages” report under Indexing (formerly called “Coverage”) to find 404 errors and other crawl issues. You’ll see a list of broken URLs that need fixing.

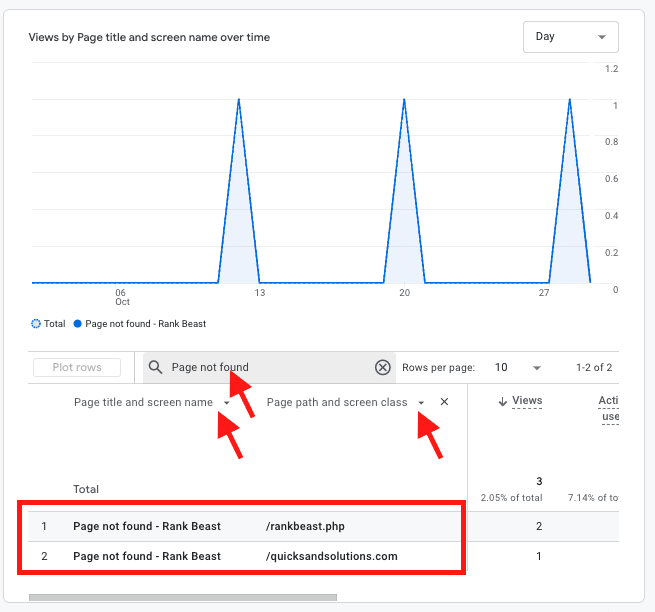

- Google Analytics: To find 404 pages, create a random invalid page (e.g., https://[yourwebsite]/randopageurlfornotfound) and note the page title (e.g., “Page not found”). In GA4, go to Reports > Engagement > Pages and screens, filter by “Page title”, and search for the 404 title to identify broken URLs causing this error.

- Screaming Frog SEO Spider: This powerful crawler scans websites for errors, including 404s. The free version lets you crawl up to 500 pages—perfect for smaller sites.

- Ahrefs Site Audit: A premium tool with free capabilities when connected to Google Search Console. It helps identify 404 errors while also providing insights into site health and SEO performance.

- SEMrush Site Audit: SEMrush offers a comprehensive set of SEO tools, including crawlers that find 404 errors and other site health insights. Useful for larger websites with more complex SEO needs.
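For a short list of URLs, the same kind of check these tools perform can be sketched in a few lines of Python. This is a simplified illustration, not a replacement for a crawler. The function names (`fetch_status`, `find_not_found`) and the example.com URLs are hypothetical. It uses HEAD requests, which some servers reject, and `urlopen` follows redirects, so the status reported is that of the final destination.

```python
import urllib.error
import urllib.request


def fetch_status(url):
    """Return the HTTP status code for url (makes a real network request)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; keep the code.
        return error.code


def find_not_found(urls, get_status=fetch_status):
    """Return the URLs whose status code is 404."""
    return [url for url in urls if get_status(url) == 404]


# Offline demonstration with hypothetical, pre-recorded status codes.
known = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-post": 404,
}
print(find_not_found(known, get_status=known.get))
```

Passing the status lookup in as a parameter keeps the filtering logic testable without touching the network. Calling `find_not_found(urls)` with the default performs live requests.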

Implementing Redirects Correctly

Once you’ve identified 404 errors, the next step is to set up 301 redirects. A 301 redirect tells search engines and people that the original page has been moved permanently, ensuring SEO value transfers to the new destination. Follow these steps to implement redirects effectively:

- Select a relevant page: Redirect people to a page that closely matches the original content. For example, if a blog post has been removed, redirect it to a similar post—not just the main blog page.

- Set up the redirect: Use the appropriate tools or plugins to create the 301 redirect.

- Test the redirect: Verify that the redirect works as expected. You can use the same tools mentioned above (e.g., Screaming Frog) to confirm it’s functioning correctly.

While redirects are essential, using too many can negatively impact your site. Redirect chains (multiple redirects linking one after another) can slow down page loading times and confuse crawlers. Aim to resolve 404 errors at their source by updating internal links instead of relying solely on redirects. Good website hygiene means identifying and fixing broken links proactively, rather than constantly adding new redirects.
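To make the point about redirect chains concrete, here is a small sketch that collapses chains so every old path points directly at its final destination. It assumes your redirects can be exported as a simple mapping of old path to new path. The function name `flatten_redirects` and the sample paths are hypothetical.

```python
def flatten_redirects(redirects):
    """Point every old path straight at its final destination.

    redirects maps an old path to the path it redirects to. Chains such
    as /a -> /b -> /c are collapsed to /a -> /c and /b -> /c. A loop
    raises ValueError, since it has no final destination.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following the chain while the target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical export: /summer-sale was moved twice over time.
rules = {
    "/summer-sale": "/sale",
    "/sale": "/offers",
}
print(flatten_redirects(rules))
```

After flattening, each old URL needs a single hop to reach the live page, which avoids the extra load time and crawler confusion that chains can cause.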

Here are platform-specific suggestions for setting up redirects:

- WordPress: Use a plugin like Redirection to manage redirects. It also tracks 404s and simplifies redirect management compared to using .htaccess files.

- Squarespace: Add redirects via Settings > Developer Tools > URL Mapping. Enter the old and new URLs following the provided syntax to activate the 301 redirect.

- Shopify: Navigate to Online Store > Navigation > View URL Redirects and enter the old and new paths. Simple as that!

- HubSpot CMS Hub: Create and manage redirects via Settings > Website > Domains & URLs > URL Redirects. Add both the old and new URLs to create a 301 redirect.

- Wix: Add redirects using the Settings > SEO Dashboard > URL Redirect Manager.

- Drupal: Install the Redirect module to manage redirects directly within the backend.

- Sitecore: Use the content editor to create a redirect within the platform.

Wrapping It Up: Staying on Top of SEO Basics

SEO doesn’t have to be overwhelming. It’s often the small, simple things (like optimizing H1 tags, setting up redirects, and maintaining a clean sitemap) that have the most immediate impact. By focusing on this low-hanging fruit, you’re already making strides toward better visibility and user experience.

The key is consistency. Regularly checking for 404 errors, monitoring Search Console, and keeping an eye on redirects will ensure your site stays in good health. SEO isn’t a “set it and forget it” kind of task; it’s more like tending a garden. A little attention here and there goes a long way, and before you know it, you’ll start seeing the results, as long as you also have solid content.

So, as you move forward, remember: Keep things clear, keep things simple, and most importantly, keep your audience in mind. Search engines follow the breadcrumbs we leave behind, but it’s the people who matter most. Optimize for them, and you’ll find that SEO success naturally follows.

What are common low-hanging fruit in SEO?

What are quick SEO wins for beginners?

Quick SEO wins include:

- Implementing 301 redirects correctly.

- Ensuring your sitemap.xml is live and submitted to search engines.

- Properly configuring your robots.txt file.

- Optimizing H1 tags for clarity.

- Identifying and resolving 404 errors.

What is a sitemap.xml, and why is it important for SEO?

A sitemap.xml is a file that lists all the pages on your website, serving as a roadmap for search engines. It ensures that search engines can find and index your content effectively, which is crucial for your site’s visibility in search results.

How can I check if my sitemap.xml is active?

To verify your sitemap:

- Visit https://[yourwebsite]/sitemap.xml.

- If it loads correctly, your sitemap is active.

- If you encounter a “page not found” error, you may need to generate or activate your sitemap using your website’s CMS or a plugin.

How do I submit my sitemap to Google Search Console?

To submit your sitemap:

- Log in to Google Search Console.

- Select your website property.

- Navigate to the “Sitemaps” section.

- Enter your sitemap URL (e.g., /sitemap.xml) and click “Submit.”

What is the role of the robots.txt file in SEO?

The robots.txt file instructs search engine crawlers on which pages to crawl or avoid. Properly configuring this file ensures that search engines can access the pages you want indexed while preventing them from accessing sensitive or irrelevant content.

How can I identify and fix 404 errors on my website?

Use tools like Google Analytics or Screaming Frog to detect 404 errors. Once identified, you can fix these errors by restoring the missing pages or setting up 301 redirects to guide users and search engines to the correct pages.

What is a 301 redirect, and why is it important?

A 301 redirect is a permanent redirection from one URL to another. It’s essential for maintaining SEO value when a page’s URL changes, ensuring that both users and search engines are directed to the correct page without losing ranking authority.