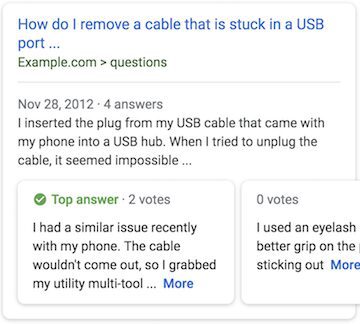

I’ve observed a decline in organic search traffic over the past decade. Search engines, notably Google, have introduced features like People Also Ask boxes and list, paragraph, and video snippets that push organically ranking website listings further down the page. Search engine optimization professionals (SEOs) coined the term “Zero-Click Searches” to describe how users get what they need without visiting the websites businesses have worked hard to create and maintain. In response, SEOs adapted by optimizing content to appear in these coveted spots, aligning with Google’s ideal ranking signals.

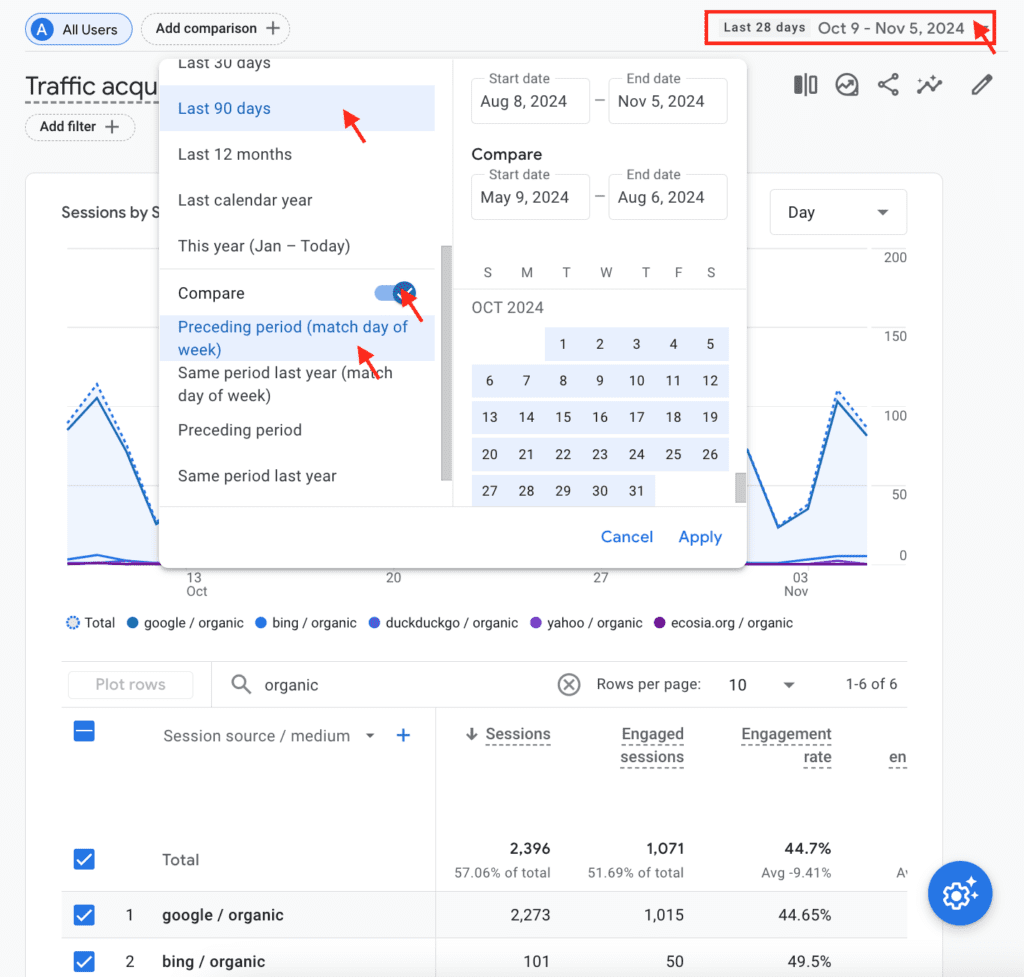

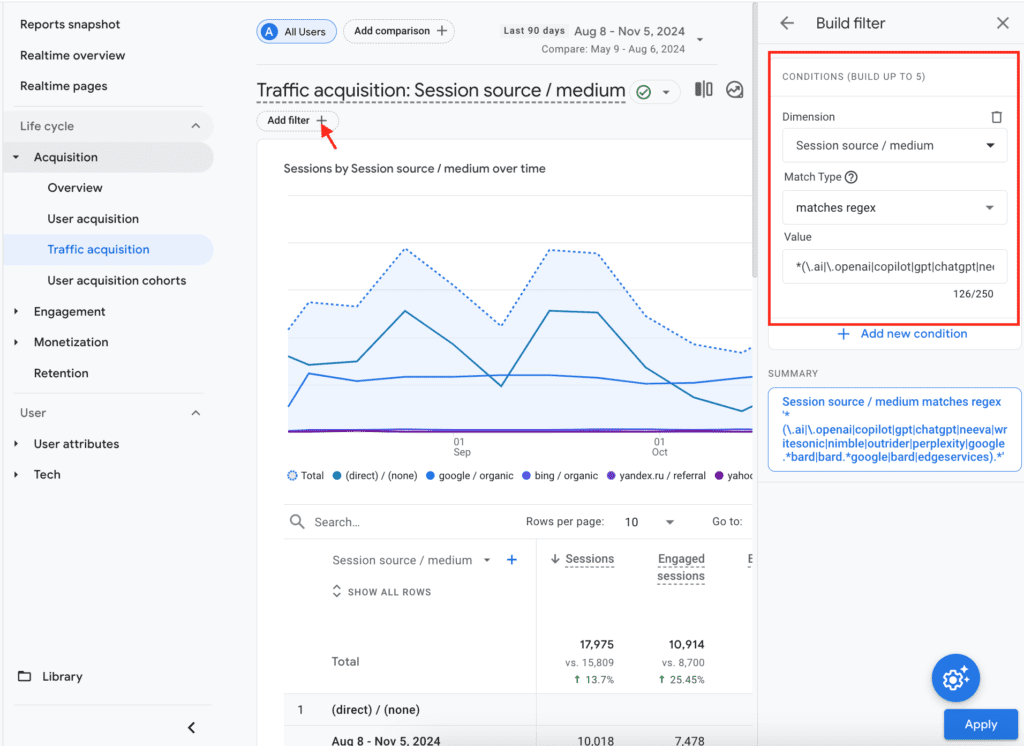

However, Google has made numerous updates in recent years, including many core algorithm adjustments, and another core algorithm update rolled out this month (November 2024). This volatility raises questions about whether search engines are providing the best results for people and whether the traffic they generate is still as high-quality as we once expected. For instance, a customer’s concept of brand authority may differ from Google’s understanding, especially regarding smaller, niche websites. When we’re over-reliant on search engines, this creates a discrepancy between what’s actually available and what people are shown.

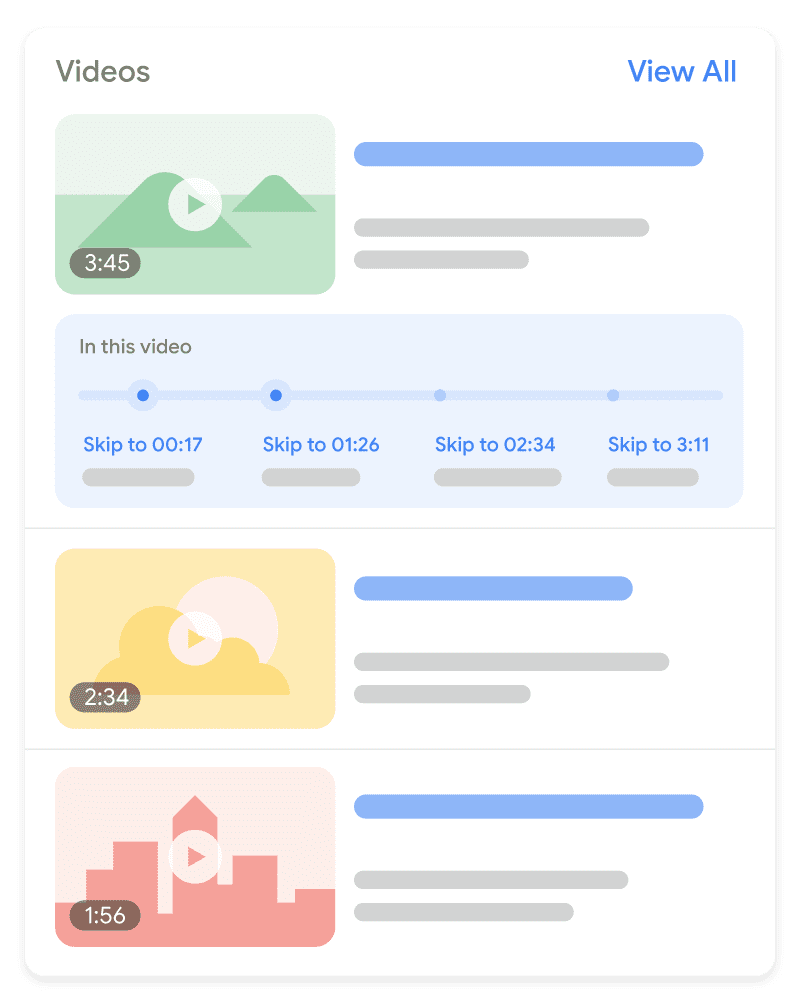

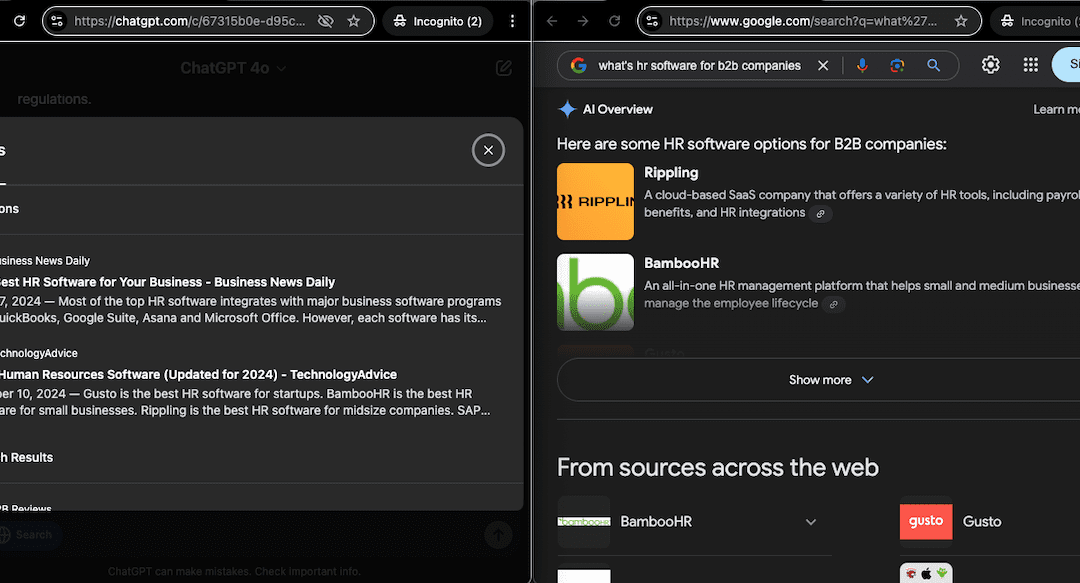

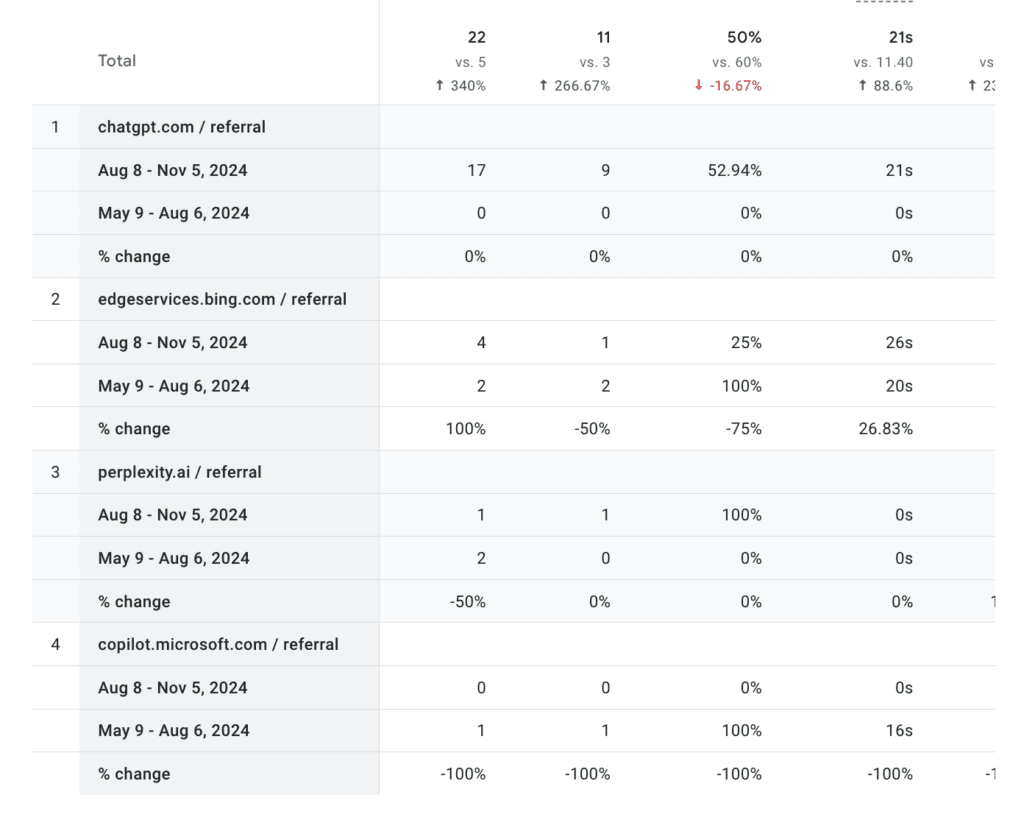

Now, we’re living in an AI revolution that’s fundamentally changing how people and businesses interact with digital technologies. In mid-May 2024, Google introduced AI Overviews (AIO), which occupy the very top of search results, the most valuable real estate on results pages, pushing even ads further down. Powered by Google’s Gemini AI model, AI Overviews provide generative responses on the fly, offering high-level information drawn from various sources, including your websites. Even when an AI Overview cites a website, people have to click a source link within the generated response to reach it, which makes search-generated traffic to your site less likely.

Google has now integrated advertising into AI Overviews as well. These ads appear as typical shopping ads within the generative result, marked with a “Sponsored” label, pushing other search features and organic results even further down the page, to places people don’t go as often.

Most people don’t scroll past a certain point, if they scroll at all. Another general rule is:

The more input an action requires from a person, the less likely they are to see it or interact with it.

People want things fast, immediately, without having to do much more than they already did to get to where they are.

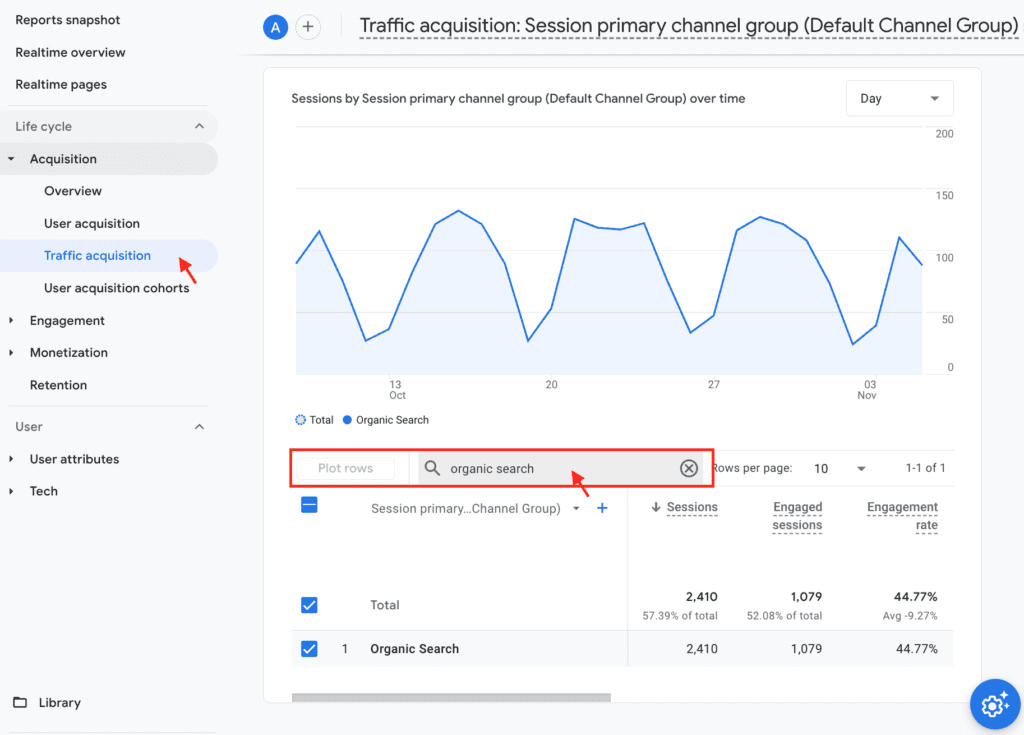

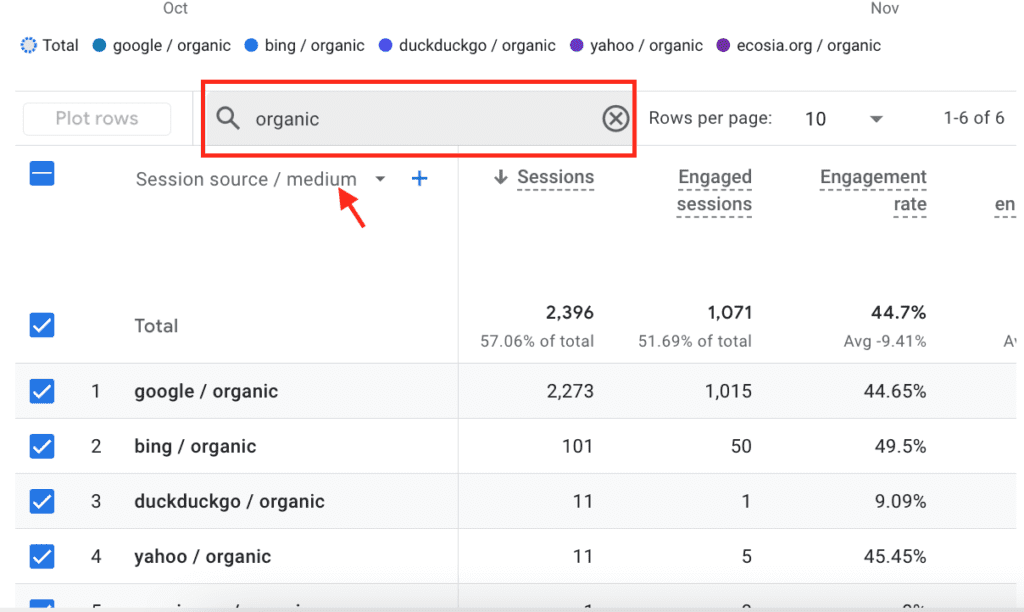

The Recent Decline in Organic Traffic

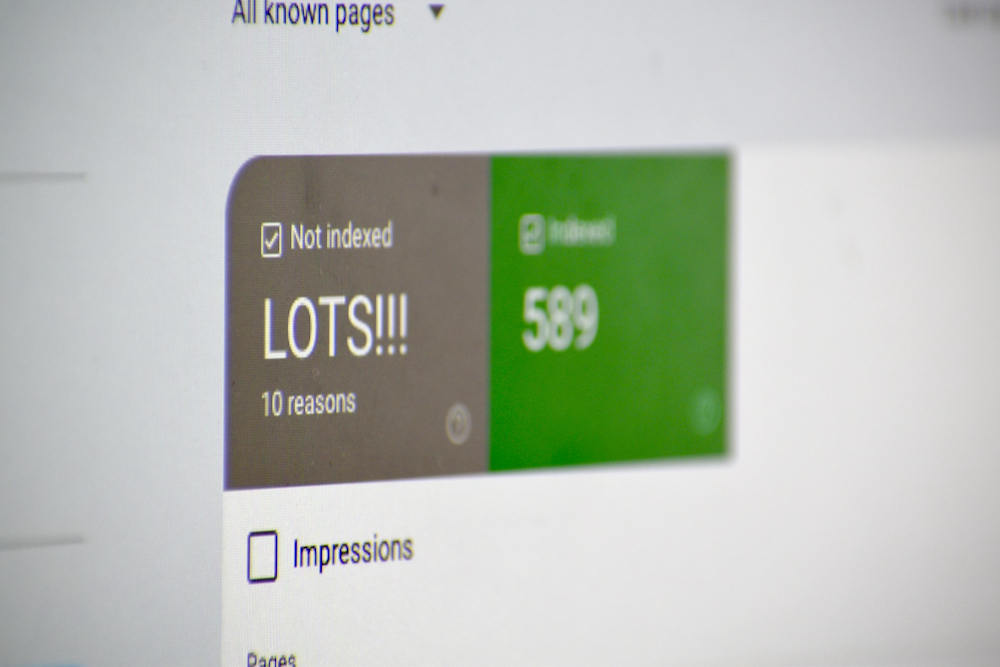

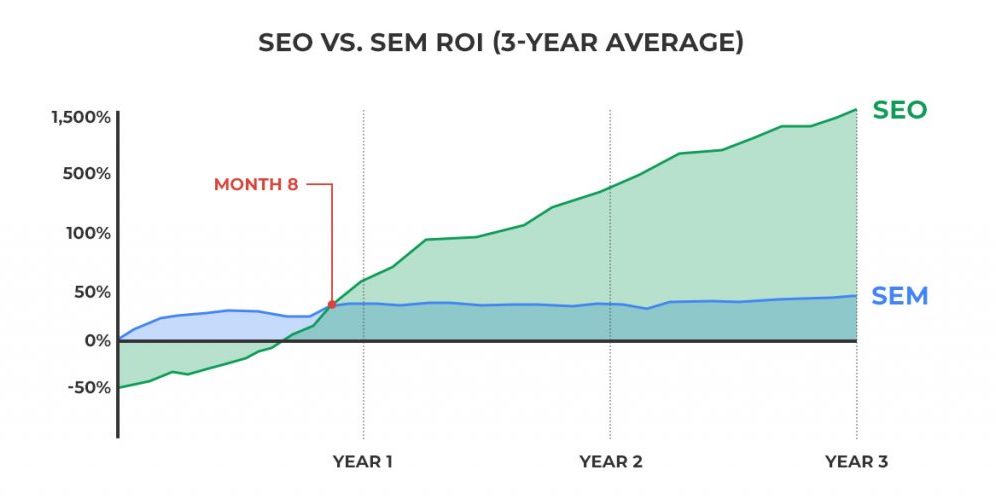

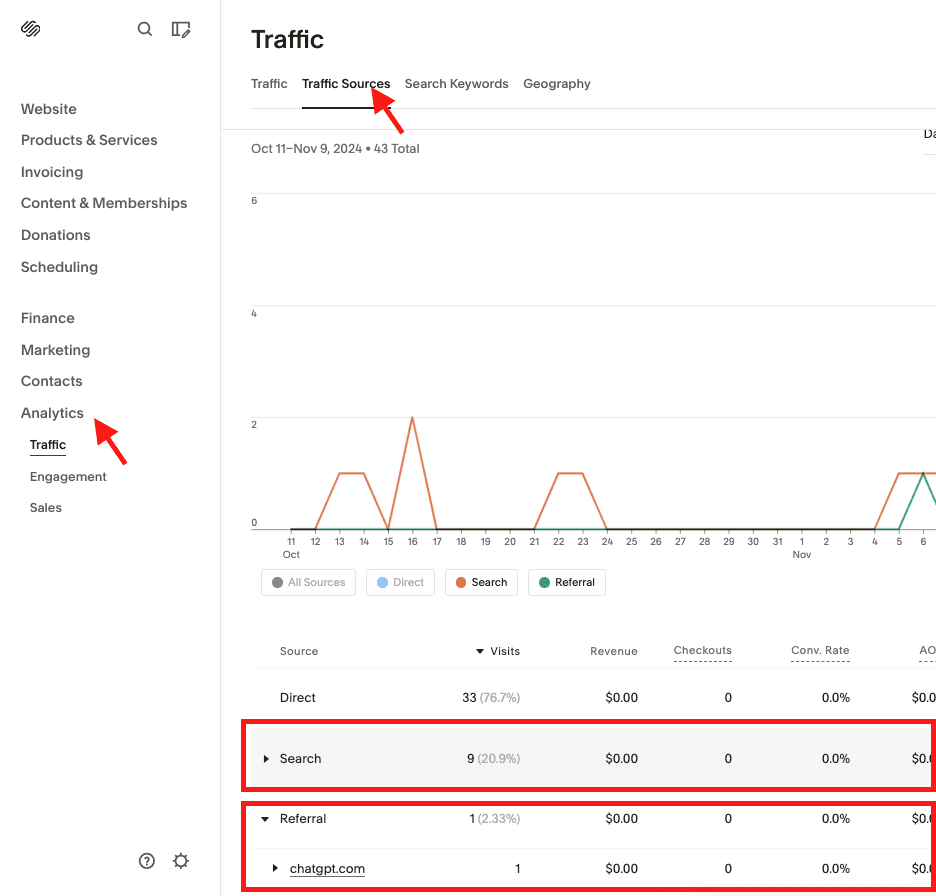

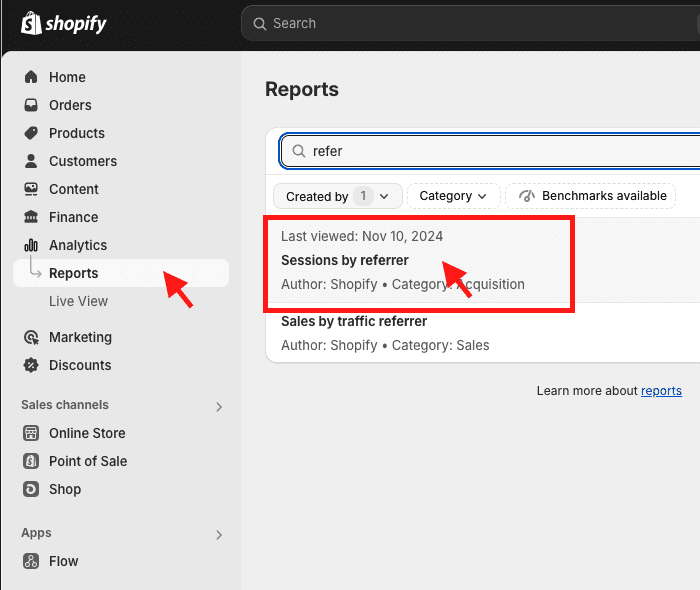

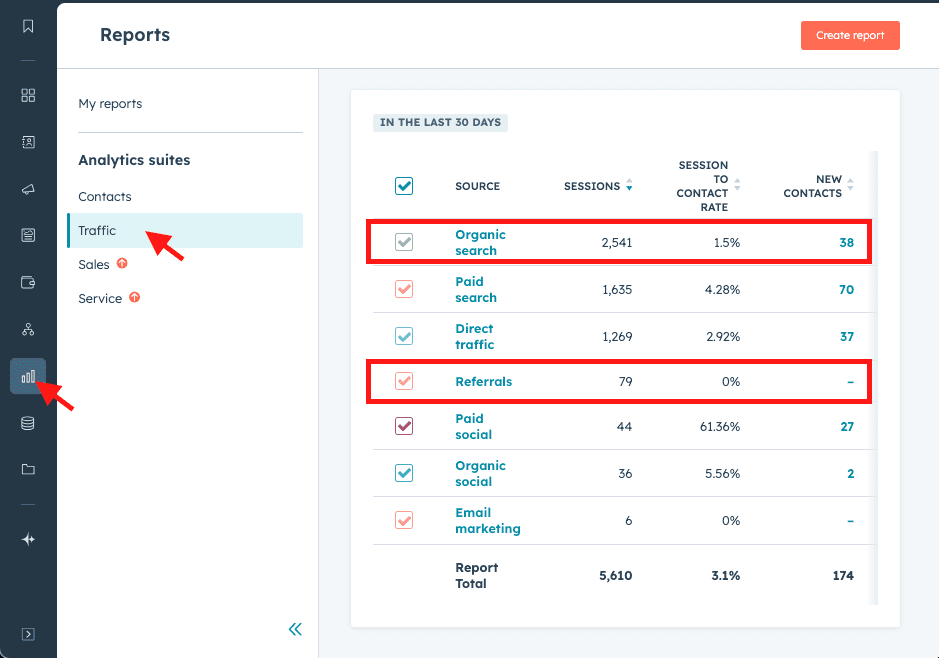

I’ve seen the impact in real time with clients and through conversations with other marketing professionals, especially SEOs. Dramatic drops in organic search traffic, such as 50% year over year, are not uncommon. This trend suggests that relying solely on traditional SEO tactics is no longer sufficient.

In response, major trends are emerging:

- Learning and implementing different measurement metrics

- Earning the trust of real people

- Connecting by actually knowing and meeting customer/community needs and wants

Unfortunately, there’s growing concern that Google is clamping down on the concept of the open web. Through zero-click searches, and even by removing entire websites from its search results, Google is affecting the livelihoods of content creators and businesses. The ethical implications are concerning at best.

How should we respond to this? What approach should we take if these are indeed the realities the tech giants are shaping?

For starters, I’m seeing talk about building brand awareness and trust rather than working to rank content around related topics and themes.

I see real potential in creating your own community ecosystem. It’s still about quality over quantity, even though we’re seeing and experiencing more and more quantity over quality. That doesn’t make quantity the most effective approach.

We could adapt by focusing on building direct relationships with our audience, diversifying our digital presence, and emphasizing authentic engagement over reliance on traditional SEO tactics alone. Exploring alternative platforms and creating unique, high-quality content can help mitigate the impact of changes imposed by major tech companies.

For me, it’s still about responding with an approach made by humans, for humans.

Are people visiting websites less?

It’s a valid concern. With the rise of social media networks and community platforms like Discord, Reddit, and Substack, there’s a noticeable shift in where people seek information and engage with content. While managing an inbound marketing website has been the norm, considered the foundation of your online presence, this standard is evolving.

Websites still allow people to dive deeper into your offerings, take action, and interact with you. They’re also where other platforms direct interested users. However, recognizing that search engines are not the only places people go is crucial. Adapting involves embracing other platforms where your audience spends time and ensuring you’re present and active there.

We’re going to cover some long-held best practices that will serve you well no matter what and set you up for the evolving shifts within the search industry.

What’s Still Important on Websites?

Even as people begin to look beyond your website for your content and community, websites remain a crucial element of your online presence. At least for today.

I’ve intentionally left out some of the tried-and-true SEO techniques for the technical and content aspects of optimizing for search engines (i.e., technical and on-page SEO). My philosophy is to create for people first; ideally, search engines and crawlers follow suit. That may not always be the case. Sometimes they do. Sometimes they don’t.

What matters more than how I optimize page titles for SEO? Ensuring the content is high-quality, informative, helpful, and serves its purpose well. I need to make sure my content is easy to understand and well laid out wherever I manage digital content. I also want to make it easier for various technologies to understand the content semantically without having to read through all of it.

Allowing or Disallowing AI Bots

Now, if you don’t want AI bots to crawl and scrape your content and websites, I recommend blocking them in your robots.txt file for site-wide directives and using robots meta tags on individual pages or sections where you want page-level control. Keep in mind that robots meta tags govern how a page is indexed and displayed, not whether it’s fetched: a bot has to crawl the page to see them. For instance, The New York Times updated its robots.txt file to block most AI bots, citing concerns over its content being used without proper compensation.

There are implications for allowing and disallowing bots and crawlers. I see it in two ways:

- Protecting Your Content: Allowing search engines and AI technologies to crawl your site could lead to them effectively stealing your content.

- Limiting Visibility: Blocking bots might limit your potential to show up for your customers. I believe it’s important to allow bots to crawl your sites, at least for your products and services pages. The same goes for your location information if you have one or more physical locations.

Here’s an example of a robots.txt file that blocks some bots and not others:

# Block OpenAI's GPTBot
User-agent: GPTBot
Disallow: /

# Block ChatGPT-User, OpenAI's agent for user-initiated requests (such as plugins)
User-agent: ChatGPT-User
Disallow: /

# Opt out of Google's generative AI training (Gemini); this token does not affect Google Search
User-agent: Google-Extended
Disallow: /

# Block Perplexity AI's crawler on one section and allow it on another
User-agent: PerplexityBot
Allow: /directory-1/
Disallow: /directory-2/

# Allow all other bots full access
User-agent: *
Disallow:
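If you want to sanity-check rules like these before publishing them, Python’s standard-library `urllib.robotparser` can parse robots.txt text locally. The sketch below feeds it the example above (with each group’s lines kept together) and asks whether each user agent may fetch a few hypothetical URLs on a placeholder `example.com` domain. Treat the result as one parser’s interpretation, not a guarantee: Python’s parser applies the first matching rule, while Google documents a most-specific-match approach, and robots.txt is a request that well-behaved bots honor rather than an enforcement mechanism. It also shows why the line grouping matters: CPython’s parser treats a blank line directly after a `User-agent` line as the end of that group, so a spaced-out layout can leave the `Disallow` rule orphaned.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt, with each group's lines kept together.
ROBOTS_TXT = """\
# Block OpenAI's GPTBot
User-agent: GPTBot
Disallow: /

# Block ChatGPT-User, OpenAI's agent for user-initiated requests
User-agent: ChatGPT-User
Disallow: /

# Opt out of Google's generative AI training (does not affect Google Search)
User-agent: Google-Extended
Disallow: /

# Block Perplexity AI's crawler on one section and allow it on another
User-agent: PerplexityBot
Allow: /directory-1/
Disallow: /directory-2/

# Allow all other bots full access
User-agent: *
Disallow:
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text locally instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # Hypothetical paths on a placeholder domain.
    checks = [
        ("GPTBot", "/blog/post"),
        ("ChatGPT-User", "/products/"),
        ("Google-Extended", "/"),
        ("PerplexityBot", "/directory-1/page"),
        ("PerplexityBot", "/directory-2/page"),
        ("Googlebot", "/directory-2/page"),
    ]
    for agent, path in checks:
        allowed = rp.can_fetch(agent, "https://example.com" + path)
        print(f"{agent:<16} {path:<20} {'allowed' if allowed else 'blocked'}")

    # CPython's parser ends a group at a blank line that directly follows a
    # User-agent line, so this spaced-out version leaves the Disallow orphaned.
    spaced = build_parser("User-agent: GPTBot\n\nDisallow: /\n")
    print("Spaced-out file still lets GPTBot in:",
          spaced.can_fetch("GPTBot", "https://example.com/"))
```

Swap in your own rules and real paths to see how this parser reads them; for how a specific crawler interprets your file, check that crawler’s own documentation.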

Manage your crawler directives at your own discretion. Let’s talk about what else you can do to make sure you’re focusing on impactful digital marketing efforts.

Creating High-Quality Tailored Content

High-quality content remains the cornerstone of engaging your audience. In this section, we’ll discuss what constitutes exceptional content and how tailoring it to specific platforms can enhance engagement and reach.

Well-written, high-quality content is key. But, what exactly does that entail? It requires:

- Originality

- Genuine value for your audience

- Sufficient depth and substance

- Prioritizing quality over quantity

- Being informed and honest

Understanding when to offer high-level, easy-to-understand pieces versus deeper, more advanced content is crucial. Tailoring your content to each platform enhances engagement. For instance:

- Provide long-form written content to audiences who love to read, on platforms like Substack.

- Offer quick tips and insights on visual platforms like Instagram or TikTok.

By meeting your audience where they are and delivering content in the format they prefer, you increase the likelihood of meaningful engagement.

Standing Out in the Age of AI

AI technologies have made accessing general information easier than ever, often directly within search results or through chatbots. This shift means that to capture your audience’s attention, you must zero in on the specific problems your customers face and address them in your own way. It’s more important than ever to differentiate your content. Here, we’ll explore strategies to highlight your unique value proposition and connect with your audience on a deeper level.

Ask yourself:

- Why would someone choose you over another?

- What’s your unique perspective and experience?

- How do you stand out and why?

By focusing on your unique experiences and the real pain points of your audience, you provide value that generic AI-generated content cannot. That’s essential for resonating with those who need what you offer.

What unique experiences and trends can you point out that truly resonate with your audience? Think about their real challenges. Addressing these pain points helps you tailor your offerings to meet their needs effectively.

Sharing relatable stories or examples that show how your expertise solves these issues builds trust. It demonstrates you understand their struggles on a deeper level, something generic AI-generated content often misses.

We need content that doesn’t just inform but truly engages. Provide value by tackling problems AI can’t solve (yet) or delivering insights with the nuance only a human can offer. This approach keeps your audience interested and sets you apart.

We also want to ask: what do people value? I follow the Marketing Accountability Council (MAC), and its founder, Jay Mendel, recently shared an article that I found very insightful about a more human approach to marketing. The BBC conducted research to understand its audiences’ values. It identified 14 core values, each underpinned by psychological needs, as part of developing tools with a human focus. These include achieving goals, belonging to a group, self-expression, having autonomy, and receiving recognition, among others. I encourage you to review it. The point isn’t to take advantage of people or treat them as tools. It’s to understand. To listen. To connect.

Enhancing User Experience to Engage Audiences

User experience (UX) plays a pivotal role in retaining and engaging your audience. This section delves into key aspects of UX design that make your content more accessible and enjoyable across different platforms. Good design involves:

- Formatting and spacing your content

- Ensuring no obstacles or unwanted distractions

- Adapting to each medium’s formatting, length, and appropriate depth

Ease of use extends to how your content appears on other platforms. Similarly, structured data markup (covered below) plays a vital role in how technologies understand your content. By categorizing your content effectively, you make it easier for search engines and AI technologies to grasp what you’re offering, giving you an edge over others.

My philosophy is to make it easy and effortless for people to consume our creations. People are best served when we provide straightforward ways to interact with our digital stuff. If you need people to pay you to access it, give them a sample and then provide easy and effortless ways to pay for it. It’s empathy in design that doesn’t ignore or neglect the places that people go often.

UX is also the concept behind why some websites place “Sign Up” front and center while tucking “Login” away in a navigational element. The sign-up is a brightly colored button, and the login is an unassuming text link that’s harder to find.

This tells me that these businesses are:

- Sales/exponential growth focused

- Not paying customer focused

I see you, and I wish you’d just make your login links easier to find, perhaps in a secondary color that’s not as bright. A color that contrasts with your website theme works great. But please, at least make it a button.

Improving user experience is just one side of the coin. Ensuring that search engines and AI can effectively interpret your content is equally important. This is where structured data markup comes into play.

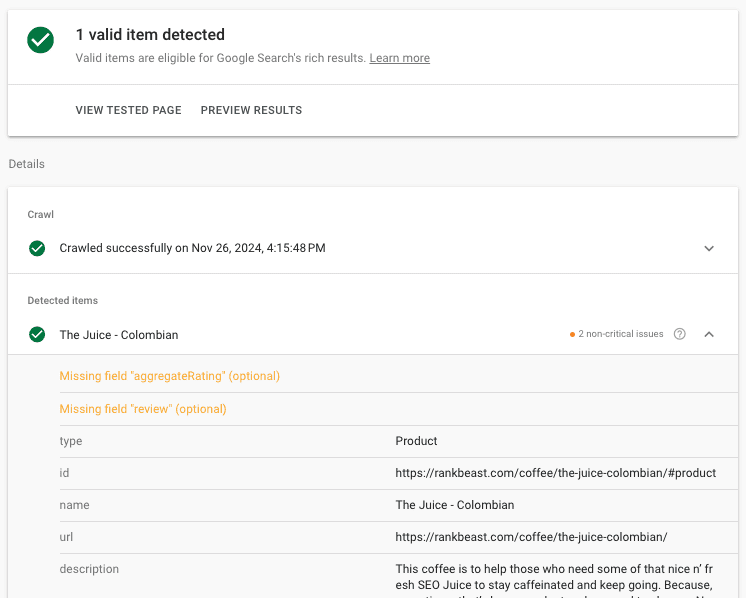

Leveraging Structured Data Markup for Better Visibility

To improve how search engines and AI understand your content, implementing structured data markup is essential. We’ll explain what schema markup is and how it can boost your content’s visibility in search results.

Structured data markup (or schema markup) is a shared, standardized vocabulary, maintained at Schema.org, that comes in a variety of types. Put simply, schema markup is extra code that labels the type of content on any given page.

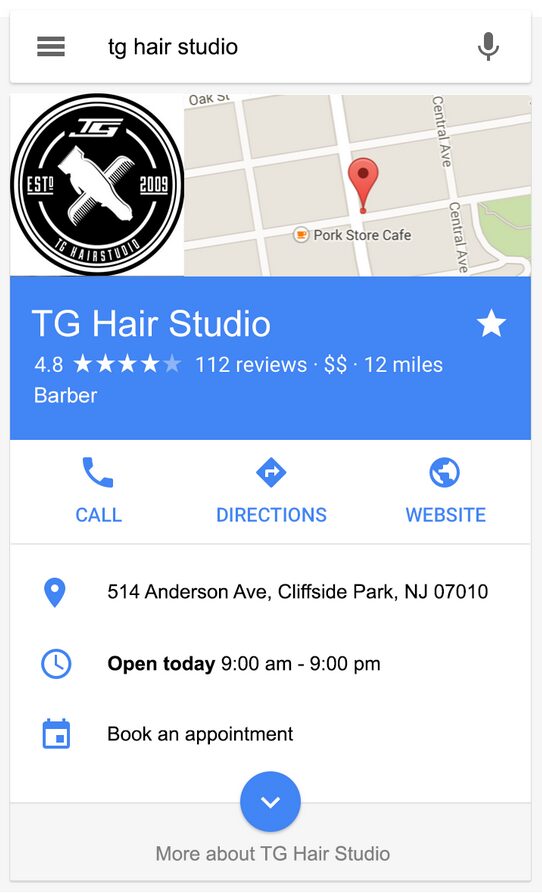

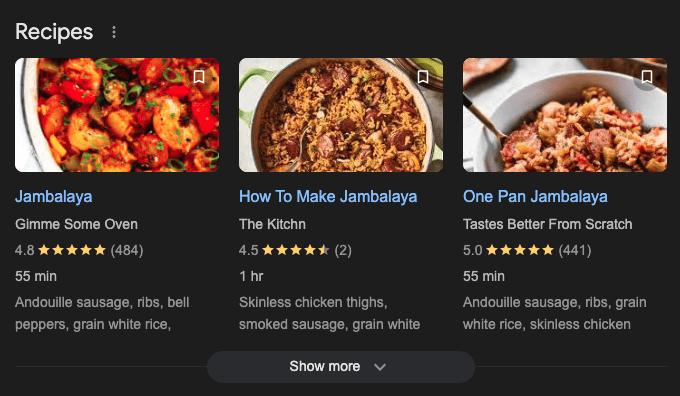

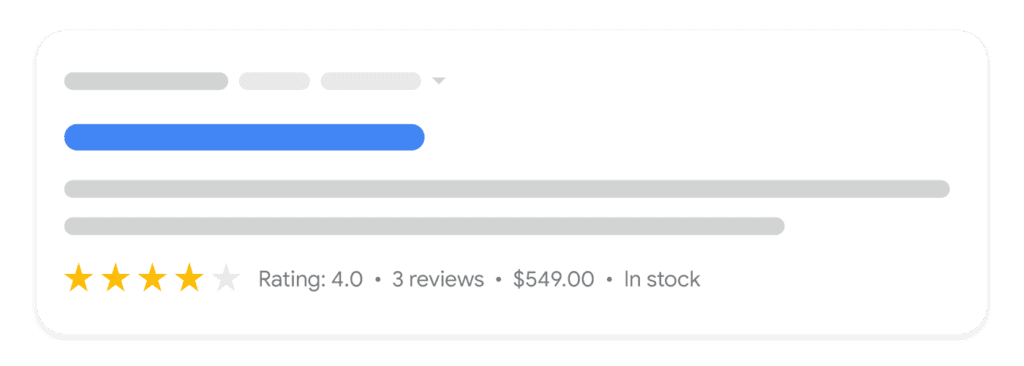

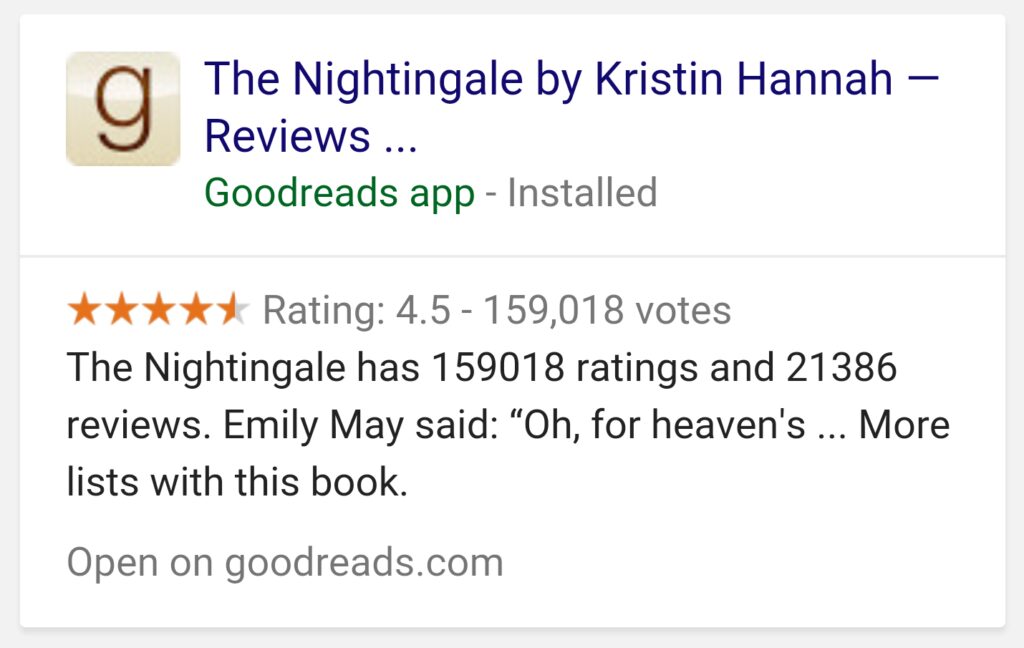

The most commonly used markup types include:

- Local Business

- Articles

- FAQs

- Recipes

- Videos

- Calendar events

Schema markup exists so that advanced crawling technologies can quickly understand what your content is at a high level without needing to analyze the content itself. It gives your content a leg up, making it easier for technologies like Google and other emerging crawlers to grasp what your stuff is about.
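To make “extra code” concrete, here’s a minimal sketch of the JSON-LD form of schema markup for a local business, built with Python’s `json` module. The type and property names (`LocalBusiness`, `PostalAddress`, `streetAddress`, `addressLocality`, and so on) come from the Schema.org vocabulary; the function name and all business details are hypothetical placeholders, and a real page would likely include more properties, such as opening hours.

```python
import json


def local_business_jsonld(*, name: str, url: str, telephone: str, street: str,
                          city: str, region: str, postal_code: str,
                          country: str) -> dict:
    """Build a Schema.org LocalBusiness object as a plain dict (JSON-LD)."""
    return {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,    # city or town
            "addressRegion": region,    # state or province
            "postalCode": postal_code,
            "addressCountry": country,  # two-letter ISO 3166-1 code, e.g. "US"
        },
    }


if __name__ == "__main__":
    # Hypothetical business details, for illustration only.
    markup = local_business_jsonld(
        name="Example Coffee Roasters",
        url="https://www.example.com",
        telephone="+1-555-0100",
        street="123 Main St",
        city="Springfield",
        region="IL",
        postal_code="62701",
        country="US",
    )
    # JSON-LD is embedded in a page inside a script tag of this type.
    print('<script type="application/ld+json">')
    print(json.dumps(markup, indent=2))
    print("</script>")
```

The printed output is the kind of block a crawler reads to learn “this page is about a local business at this address” without parsing the rest of the page.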

So, if you have an FAQ section or blogs with mini FAQ sections, you can collaborate with ChatGPT on this one:

- Throw that information into ChatGPT.

- Let it know what schema type it is.

- Have it output JSON-LD code, wrapped in a <script type="application/ld+json"> tag, that you’ll place in the HTML of any given page (in the head or the body).

You can even paste in the page copy and have it evaluate which schema types apply. Just double-check everything any AI tool outputs. As we all know, it’s prone to making errors. As well as hallucinating. Or creating time-traveling bot assassins.
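If you’d like a repeatable alternative to prompting ChatGPT each time, or a way to double-check what it gives you, FAQ markup can also be generated directly from your question-and-answer pairs. This is a minimal sketch that assumes your FAQs are already available as plain-text `(question, answer)` tuples. The `FAQPage`, `Question`, and `Answer` types and the `mainEntity`, `name`, `acceptedAnswer`, and `text` properties come from Schema.org; the function names and sample content are hypothetical.

```python
import json


def build_faq_jsonld(faqs: list[tuple[str, str]]) -> dict:
    """Turn (question, answer) pairs into a Schema.org FAQPage object."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def to_script_tag(data: dict) -> str:
    """Serialize JSON-LD into a <script> tag ready to paste into a page."""
    # "\/" is a valid JSON escape for "/", so rewriting "</" keeps the data
    # identical while preventing text like "</script>" from closing the tag early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Hypothetical FAQ content, for illustration only.
    faqs = [
        ("Do you ship internationally?", "Yes, we ship to most countries."),
        ("How long does delivery take?", "Most orders arrive within 5 to 7 business days."),
    ]
    print(to_script_tag(build_faq_jsonld(faqs)))
```

Whichever way you generate it, run the result through a validator such as Google’s Rich Results Test or the Schema Markup Validator before publishing. Also note that since 2023 Google has shown FAQ rich results mainly for well-known government and health sites, so for most businesses the benefit here is machine readability rather than a guaranteed rich result.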

Creating schema JSON code with GPT is actually really straightforward once you get the gist of it, and it’s good practice to update this code as your content changes. You don’t want the two to mismatch. If you have a tool that does this automatically, great. If not, whenever you update a page that already has schema markup, check whether its JSON code needs updating too.
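If you don’t have a tool that keeps markup in sync, a small script can at least flag drift. The sketch below is a hypothetical checker built on Python’s standard-library `html.parser`: it pulls any `FAQPage` JSON-LD out of a page’s HTML and reports questions or answers that no longer appear in the page’s visible text. It assumes the FAQ object sits at the top level of the JSON-LD (not nested inside an `@graph`) and compares whitespace-normalized, lowercased text, so it will flag accurate-but-reworded content and may miss edge cases such as words split across inline tags.

```python
import json
from html.parser import HTMLParser


class PageParser(HTMLParser):
    """Collects JSON-LD blocks and visible text from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.jsonld_blocks = []    # raw JSON strings from ld+json scripts
        self.visible_text = []     # text outside <script> and <style>
        self._in_jsonld = False
        self._hidden_depth = 0     # >0 while inside other <script>/<style> tags
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []
        elif tag in ("script", "style"):
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.jsonld_blocks.append("".join(self._buffer))
            self._in_jsonld = False
        elif tag in ("script", "style") and self._hidden_depth:
            self._hidden_depth -= 1

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)
        elif not self._hidden_depth:
            self.visible_text.append(data)


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so formatting differences don't count."""
    return " ".join(text.split()).lower()


def find_faq_mismatches(html: str) -> list[str]:
    """List FAQ questions/answers in the markup that are missing from the page text."""
    parser = PageParser()
    parser.feed(html)
    parser.close()
    page_text = normalize(" ".join(parser.visible_text))

    problems = []
    for block in parser.jsonld_blocks:
        data = json.loads(block)
        for obj in data if isinstance(data, list) else [data]:
            if obj.get("@type") != "FAQPage":
                continue
            for item in obj.get("mainEntity", []):
                question = item.get("name", "")
                answer = item.get("acceptedAnswer", {}).get("text", "")
                for label, value in (("question", question), ("answer", answer)):
                    if normalize(value) not in page_text:
                        problems.append(f"{label} not found on page: {value!r}")
    return problems


# Hypothetical page where the refund answer was edited but the markup wasn't.
EXAMPLE_PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "FAQPage",
 "mainEntity": [
  {"@type": "Question", "name": "Do you ship internationally?",
   "acceptedAnswer": {"@type": "Answer", "text": "Yes, to most countries."}},
  {"@type": "Question", "name": "Do you offer refunds?",
   "acceptedAnswer": {"@type": "Answer", "text": "Within 30 days of purchase."}}
 ]}
</script>
</head><body>
<h2>Do you ship internationally?</h2>
<p>Yes, to <strong>most</strong> countries.</p>
<h2>Do you offer refunds?</h2>
<p>Within 14 days of purchase.</p>
</body></html>"""

if __name__ == "__main__":
    for problem in find_faq_mismatches(EXAMPLE_PAGE) or ["No mismatches found."]:
        print(problem)
```

Running a check like this after each content edit (or as part of a publishing workflow) is one lightweight way to keep the markup and the page telling the same story.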

At this point, it’s clear that while general content may hold less importance on websites, the real focus should be on the value you provide. Communicating the value through your services, products, or expertise. What truly matters is how you stand out in your field and communicate your unique perspective.

You’ll still need to establish authority and credibility. Evidence of your expertise, like testimonials, case studies, or a strong reputation, remains essential. At the same time, ensuring technologies like AI and search engines understand what you offer is equally important.

Schema markup plays a key role here. By categorizing your content effectively, it allows these technologies to interpret your offerings more quickly and accurately—giving you a clear advantage over competitors who neglect it.

What About the Other Places People Are Going To?

As I mentioned before, we may be living through a real-time shift, observing changes in where people go to find things, learn things, buy things, see things… you get the idea. There’s a real possibility that this will impact your inbound marketing website. Over time, people may visit your website less and engage with you elsewhere instead, such as on social media networks and community platforms like Discord, Reddit, or Substack.

So, what are areas of focus to ensure you’re engaging with people where they are when it’s not on your website? Focus on platforms where your audience spends time, such as social media networks, podcasts, or community forums. Tailor your content to suit each platform’s format and audience preferences, and engage actively by responding to comments, participating in discussions, and providing valuable insights.

Create Multimedia Content & Distribution Across Different Platforms

Producing high-quality content is essential, but just as important is ensuring it provides genuine value and connects with your audience. A smart approach to content marketing involves repurposing your ideas into different formats and distributing them across platforms where your audience spends time.

Diversifying content types not only broadens your reach but also engages people in ways they prefer to consume information. Here are some strategies for tailoring multimedia content to different audiences and platforms:

- Creating a video for your blog article: When repurposing a blog post into a YouTube video, outline the main points as a script, add visual elements, and include captions for accessibility.

- Having a podcast interview about one of your main areas of expertise: When participating in a podcast interview, prepare key talking points and real-life examples that highlight your expertise. Engage in a conversational tone to make the discussion lively and relatable. After the episode airs, share it on your platforms and encourage your audience to listen and share their thoughts.

- Writing a blog about the visualization you created: If you’ve developed a unique data visualization, write a blog post explaining the insights it reveals, the tools you used, and the process behind creating it. Include screenshots or interactive elements so readers can engage directly with the visualization. This not only showcases your expertise but also provides valuable learning for your audience.

- Creating short snippets of quick takeaways for Instagram and TikTok: Transform key points from your content into engaging short videos for Instagram Reels or TikTok. Use eye-catching visuals and concise messaging to capture attention within the first few seconds. Incorporate trending music or sounds to increase visibility, and add captions or text overlays to make your content accessible to all viewers.

Each one is tailored to its platform, designed in the format that engages best there.

Your audience is on some of these platforms, not all, and they probably behave a little differently on Instagram than on Reddit. As you can imagine, this can look quite daunting for content creators, or even business owners like me.

I recommend taking it one step at a time and starting somewhere. Do not think you can do it all or should do it all. There are specific areas where you will shine and others where the audience just won’t respond well. This is part of the dance.

It’s important to track your engagement metrics to make sure you’re investing your time where it’s at least building the engagement and visibility you need. Ideally, you also track revenue metrics, with tracking that’s been tested and is free of bugs or errors.

I also recommend not doing what everyone else is doing, especially when it comes to engagement and visitor metrics. The ultimate goal here is to engage and connect with your customers. So, this is about doing the things that work best for you and your audiences. Not doing everything, everywhere, all the time.

You don’t need super short content or super long content. The metrics and people will tell you that you need to have more and more. It’s the way of technology. It’s never full. Always hungry.

So, create what works for you and then diversify the content types. Explore. Hell, have fun with it. Whatever that means for you.

Community Engagement

Building and participating in online communities fosters trust and establishes you as an authority in your field. We’ll explore effective ways to engage with communities authentically and build lasting relationships. Your presence in these spaces must provide genuine value, establishing yourself as trusted and helpful. Here’s the hard thing for businesses to understand:

Communities do not want promotional content. They’re tired of it.

They value genuine interaction over visibility, and building trust requires a long-term commitment.

Focus on contributing meaningful insights and engaging in conversations that matter to the community. Answer questions, share your expertise, and be there to help without the expectation of receiving anything in return. Participating in the community is about building relationships, not pushing products or services. While many still attempt these tactics, people have become wary of them. Such approaches are often off-putting and can even repel potential clients. Let your audience speak well of you.

Authenticity goes a long way, and people can absolutely tell when you’re genuinely interested versus just looking to make a sale.

You might consider creating your own community spaces by:

- Starting a discussion group

- Hosting webinars

- Launching a newsletter

- Creating a Discord server

In essence, these give others a place to share, engage, and connect as a community. It takes time, patience, and a willingness to embrace the process, imperfections and all.

Here are examples of community platforms and what engagement or management can look like for each. I want to re-emphasize that offering legitimate value and engaging with people is the goal, not overtly selling your stuff. There are consequences if you do, most likely getting banned and losing the trust of your customers and audiences:

- Substack – Create a newsletter where subscribers can comment on your posts and engage in discussions. Engage with readers by replying to comments, asking for feedback, and hosting subscriber-only Q&A sessions.

- Discord – Build a Discord server dedicated to your niche where members can chat in real-time. Moderate channels, organize events like live discussions or AMAs (Ask Me Anything), and foster a welcoming environment.

- Subreddit – Participate in or create a subreddit focused on your industry where people share content, ask questions, and discuss relevant topics. Contribute to threads by answering questions, posting informative content, and upholding community guidelines.

- Community Features on a Website – Implement forums or comment sections on your website where visitors can start discussions and interact with each other. Initiate topics, respond to posts, moderate comments to maintain respectful dialogue, and provide helpful resources.

Optimizing for AI Search and Overviews: Strategies to Increase Your Content’s Reach

It’s important to optimize your content for people while also considering how AI processes information, whether that content lives on your website, social media, community forums, or content-sharing sites. AI-powered search tools like Google’s AI Overviews (AIO), ChatGPT Search, and other tools built on large language models pull information from across the internet to answer people’s questions. They crawl blogs, social media posts, forums, and other online content to find the best answers.

Imagine you’re a fitness coach. By structuring your workout tips in clear headings and bullet points, AI tools can easily extract and present your content to users asking for fitness advice.

With this strategy, we want to increase the likelihood that AI systems will feature your content when people seek information related to your expertise. Keep in mind the need for differentiation and for building trust and authority. Also remember that the high-level information AI provides is best left to AI.

So, how do you optimize for AI search?

The shift toward AI-driven search means your content needs to be structured in a way that AI systems can easily process, understand, and deliver to people. But as with all things SEO… don’t force it. It’ll be too obvious.

Here are some tried and true techniques:

- Clearly Written Content: Write in straightforward language. Include concise, informative paragraphs that directly answer specific questions. At the same time, in-depth, accurate information strengthens the authority signals that people and technology value.

- Answer Questions: Address specific questions and weave them naturally into your content. Think about what your audience is asking and provide clear answers. AI bots often pull information from these sources to respond to people’s queries.

- Format Content: Use clear headings, subheadings, bullet points, and lists to organize your content. Break up the text so it’s visually appealing and doesn’t all run together. Incorporate transcripts for videos. Add alt-text to images. This makes it easier for AI models to understand, increasing the likelihood that your content will appear in AI Overviews (AIO).

- Naturally Incorporate Relevant Phrases: In other words, keyword research. Check what people are actually searching for by using tools like Google Search Console, analyzing Google’s own results, seeing how others are targeting these phrases, and using SEO tools. Then, naturally incorporate these phrases into your content. It doesn’t have to be an exact match. Remember, your creations are for people. We aim to match what they’re actually searching for rather than what we think they’re searching for.

- Do Not Keyword Stuff: For the love of God, please resist the urge to overload your content with keywords. Overusing keywords is lazy, and it’s really off-putting. Focus on writing naturally and ensure targeted keywords serve the content and the reader.
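Some of the formatting advice above can be spot-checked automatically. The sketch below is a hypothetical audit, again using Python’s standard-library `html.parser`, that lists a page’s heading outline, flags `<img>` tags with no `alt` attribute at all, and points out headings that skip a level (for example, an `<h2>` followed directly by an `<h4>`), which common accessibility guidance advises against. It deliberately accepts `alt=""`, since an empty alt is the standard way to mark a purely decorative image. It checks structure only; whether your headings and alt text are actually helpful is still up to you.

```python
from html.parser import HTMLParser


class FormatAudit(HTMLParser):
    """Records the heading outline and images that lack an alt attribute."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.outline = []             # (level, heading text) in page order
        self.images_missing_alt = []  # src of each <img> with no alt attribute
        self._level = None            # current heading level while inside <h1>-<h6>
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            names = {name for name, _ in attrs}
            if "alt" not in names:  # alt="" is fine: it marks decorative images
                self.images_missing_alt.append(dict(attrs).get("src") or "(no src)")
        elif tag in self.HEADINGS:
            self._level = int(tag[1])
            self._text = []

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._level is not None:
            self.outline.append((self._level, " ".join("".join(self._text).split())))
            self._level = None

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)


def skipped_levels(outline):
    """Return headings that jump more than one level deeper than the one before."""
    issues = []
    previous = 0
    for level, text in outline:
        if previous and level > previous + 1:
            issues.append((level, text))
        previous = level
    return issues


# Hypothetical page from the fitness coach example, for illustration only.
EXAMPLE_PAGE = """
<h1>Beginner Workout Tips</h1>
<img src="squat.jpg" alt="Person doing a bodyweight squat">
<h2>Warm-up</h2>
<img src="stretch.jpg">
<h4>Hamstring stretches</h4>
<img src="divider.png" alt="">
"""

if __name__ == "__main__":
    audit = FormatAudit()
    audit.feed(EXAMPLE_PAGE)
    audit.close()
    for level, text in audit.outline:
        print("  " * (level - 1) + f"h{level}: {text}")
    print("Images missing alt:", audit.images_missing_alt)
    print("Skipped heading levels:", skipped_levels(audit.outline))
```

The indented outline it prints is also a quick way to see your page the way a crawler might: as a hierarchy of headings rather than a wall of text.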

You’re not only optimizing for AI search and established search engines but also enhancing the overall quality and accessibility of your content. The goal is to make your content valuable for people. AI and search engines are said to be designed to recognize and promote content that best serves people’s needs, but sometimes they don’t. So, people remain the priority.

Embracing the Evolution of SEO and Digital Marketing

The landscape of digital marketing is shifting beneath our feet. While traditional SEO tactics are evolving, the core principle remains: connecting and building relationships with your customers. Your website is still a crucial hub, but it’s essential to meet your audience where they are, whether that’s on social media, community platforms, or AI-driven tools.

Focus on:

- Offering genuine value without constant selling

- Building trust over time

- Leveraging platforms that align with your strengths

As technology advances, the fundamental need for authentic connection and valuable information stays the same. Embrace change with curiosity and flexibility, and continue creating content that resonates and fosters community.