The Holy Canon of Search Optimization (SEO)

Behold! These are the SEO tactics that have achieved the long-coveted sainthood. The high priests of the super-secret society convene every fiscal year (Gregorian calendar, naturally…) to decide which chapters of SEO stay, are added, or are removed from the Canon of search scripture. (BELL RINGS) As we bow our heads in reverence, we open our hearts, our ears, and our hands to the good news of the high and almighty one.

Alas! The horrors, the dry bones, and the evildoers may rest in the SEO Cemetery, for they belong there. Yet our arms remain open to all, even to those who dare return from the hellish depths of the damned.

Blessed be the tactics that are ethical and all-good, lest one curse the name of the people. WE CAST THEM OUT! To HADES, the evildoers go! The path of the righteous is narrow, while the path of darkness is quick and manipulative. Beware the tempting corpses of undead tactics: they may briefly satisfy your cravings, but they will leave you wanting and empty.

Hear these words! The long game is tried and true. Awareness of the changes is good and Holy. The path of response and adaptation is the will of the high one. Amen.

Digital marketing is evolving rapidly with the introduction of AI tools and features. Where and how people search online depends on the tools available to them, and businesses rely on certain predictable behaviors. Think about how you open up your phone, tablet, or laptop. How do you and others search, shop, learn, and connect? In the early days of the internet, search engines like AltaVista, Ask Jeeves (my ’90s favorite), and Yahoo helped people navigate the web. Then Google, launched in 1998, rose through the 2000s to dominate and define the search market, while competitors like Yahoo and, later, Bing adapted to keep up.

As search engines became the go-to tools for navigating the web, social media entered the scene and introduced new layers to the digital reality. People began using these platforms in specific and predictable ways, and before long, these spaces became marketplaces for selling visible real estate to businesses (hello, ads). This shift defined how people spent their time online as they worked, shopped, connected, learned, and entertained themselves. It also established the standard for how companies marketed to consumers.

For years, this has been the status quo. Until now.

I am fascinated by change. As a naturally curious person, I find myself drawn to how people react to and resist it. I notice the fear change provokes and the stories we project about what it will bring. Today, we stand at the edge of major transformations. New tools and platforms are emerging, and existing juggernauts like Google are evolving in unprecedented ways. These shifts are disrupting long-held marketing tactics, especially those tied to high-level, simple informational content. People are adapting. Their search behaviors are changing. Where they go and how they use digital tools is evolving right now.

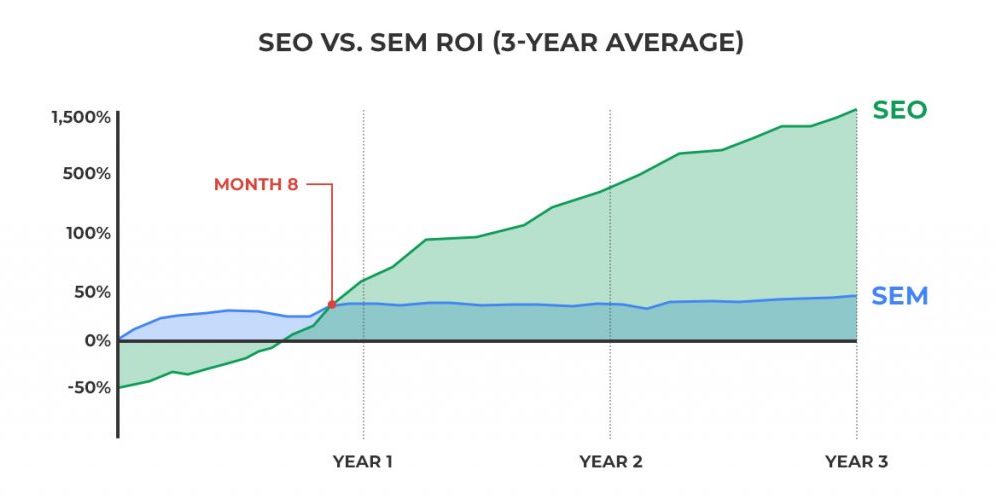

AI and large language models are reshaping how people interact with information. These technologies dynamically generate generalized content, offering direct answers and summaries without the need to visit a traditional website. Informational content, once the backbone of search strategy and rankings, is now being upended. This is causing a decrease in organic traffic across industries and sparking widespread concern. Despite this, I believe certain SEO strategies remain effective. Websites still hold value because people continue to search across AI platforms, search engines, social media, and their own communities and forums.

I pay close attention to where we are in real time. What has changed? What no longer works? What still holds its ground? All of this depends on industry, service, product, offering, movement, and audience. People in one category may search differently than others.

SEO, or as I prefer to call it, Search Optimization, is about showing up in the places people naturally go to search. The tactics I outline here remain relevant because they are rooted in creating value, serving real needs, and adapting to the tools people choose to use. These strategies are impactful today and positioned to evolve with the changing digital landscape. Some may eventually fall away, while others will continue to thrive. New approaches may emerge, and even those buried in the SEO Cemetery might rise again. GASP!

I like observing these changes as they happen and helping people navigate this moment with a clear understanding of how it impacts human behavior and the way we search.

Now, before diving into the tactics of Search Optimization, let’s focus on what makes them effective: a strong, high-quality content strategy.

Prerequisite: Content Strategy and High-Quality Content

Before we dive into optimization tactics, it is essential to start with a solid foundation. A content strategy that aligns with your goals and high-quality content that delivers for-reals value is the key to success. High-quality content is not just about writing; it is about solving problems, answering the questions AI tools don’t handle well, and connecting with your audience on a human level.

Without this groundwork, even the best marketing tactics fall flat. Think of it as building a house. Without a strong foundation, everything else crumbles. Great content gives you something to optimize, link to, and rank with. More importantly, it provides something real and unique, an antidote to the cookie-cutter, AI-generated fluff that dominates so many spaces today.

Rank Beast’s Approach to Content Strategy

I begin by understanding who you are speaking to, what they care about, and how they search. This is the blueprint for creating content that is not just optimized, but impactful and built to last. It’s about going beyond answering “what” and tapping into the “why” and “how” that truly resonate with your audience.

The Role of Content Marketing in SEO

It’s important to recognize that SEO and content marketing work hand in hand. High-quality, audience-focused content drives long-term results by meeting people’s expectations when they search and giving them exactly what they need in a way that feels real and relevant.

The role of content is shifting. AI can churn out generalized answers, but people still crave depth, connection, and expertise. Strategies like creating evergreen content, refreshing older resources, and adapting content for different platforms amplify your digital marketing efforts. By focusing on these areas, you stand out in a sea of sameness and build trust with your audience.

By combining great content with relevant Search Optimization tactics, you create a powerful strategy that connects with your audience, adapts to changing technology, and ensures your digital presence is future-proof.

Now, let’s dive into the practical side of Search Optimization. Below are the tactics I still believe in: SEO strategies that have proven their worth and continue to adapt as the digital landscape shifts. These are the approaches I use to ensure you aren’t just keeping up with change but staying ahead of it. They focus on creating meaningful connections, showing up where it matters most, and standing out in an increasingly automated, low-quality world.

On-Page/Your Website

Meta-Data Optimization

Meta-data is what people see on search results pages, in AI-driven search, and in social media previews. Here, meta-data refers to two key tags in the HTML head section: the title tag (the page title) and the meta description tag. A page title is often the first thing a searcher sees when looking something up. The description text is displayed below the title and offers a quick summary of what the page is about.

- Why it’s relevant: Meta-data helps people and technologies like AI tools understand what a page is about at the most basic level. This foundational clarity ensures your content is discoverable and accurately represented, even as search behavior and tools continue to evolve.

- Rank Beast’s approach: Meta-data is more than just keywords and summaries. It is about crafting concise and meaningful descriptions that represent exactly what the page is about. My focus is on ensuring accuracy and clarity.

- Value: Well-crafted meta-data allows technologies to understand your content quickly and efficiently without processing the full page. This ensures your content is properly represented across platforms like AI-driven search summaries and social media previews, improving its visibility and maintaining its integrity.
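To make the two tags concrete, here is a minimal sketch (Python, standard library only) that pulls the title and meta description out of a page’s HTML and flags either one that is missing or empty. The class and function names are hypothetical, and the check deliberately stops at presence; it makes no claim about ideal lengths or wording.

```python
from html.parser import HTMLParser


class MetaDataParser(HTMLParser):
    """Collects the <title> text and the meta description from an HTML document."""

    def __init__(self):
        super().__init__()
        self.title = None
        self.description = None
        self._in_title = False
        self._title_parts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self.title = "".join(self._title_parts).strip()

    def handle_data(self, data):
        # Only text that sits between <title> and </title> is kept.
        if self._in_title:
            self._title_parts.append(data)


def audit_meta_data(html):
    """Return a list of human-readable problems with a page's title and description."""
    parser = MetaDataParser()
    parser.feed(html)
    problems = []
    if not parser.title:
        problems.append("missing or empty <title>")
    if not parser.description:
        problems.append("missing or empty meta description")
    return problems
```

An empty list means both tags are present; whether they accurately describe the page is still a human judgment.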

Keyword/Search Term/Topical Research

Keyword research means researching the topics and terms people search for, so you can align your content with the language your audience uses.

- Why it’s relevant: Search engines and technologies now understand meaning beyond exact words. Research ensures your content reflects the language people naturally use while addressing specific needs AI-generated answers may overlook. This alignment helps your content stand out and meet expectations in a world shaped by evolving search tools.

- Rank Beast’s approach: I focus on uncovering the intent behind searches and aligning content with what matters most to people. It is not just about finding keywords but creating content that resonates and remains semantically clear.

- Value: Thorough research ensures your content is authentic and trustworthy, standing out even as AI tools generate more generalized content. By reflecting shared language and real-world relevance, your content connects with people and maintains visibility without over-reliance on outdated keyword strategies.

Header Optimization

Headers are the titles, headings, and subheadings that structure your visible content into clear, digestible sections. Each header serves as a description of what’s below it, acting as a roadmap for people and the technologies that help them discover content.

- Why it’s relevant: Clear headers make it easier for people to scan your content and understand its flow, while signaling structure and meaning to technologies, including search engines, AI tools, and other platforms. Structured headers are essential for helping these technologies quickly interpret and represent your content accurately, keeping it accessible and useful in an evolving digital landscape.

- Rank Beast’s approach: I see headers as more than just markers. They are compact summaries that reflect what is coming next while aligning naturally with the language people use. My focus is on creating headers that improve clarity, reinforce your content’s purpose, and ensure it is easy for both people and technologies across platforms to follow and understand.

- Value: Thoughtfully crafted headers improve the experience for people by keeping them engaged and guiding them through your content. They also help technologies, from search engines to AI-driven tools, understand your content’s purpose and context, ensuring it is properly represented wherever it is discovered. By prioritizing people in your content structure, you build stronger visibility and engagement across the digital ecosystem.
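A quick way to see headers the way technologies do is to extract the heading outline. This sketch, assuming a page’s raw HTML as input, lists every h1 to h6 heading in order and flags any heading that jumps more than one level deeper than the one before it (for example, an h2 followed directly by an h4). It treats the page as starting above h1, so a page whose first heading is an h2 is also flagged; that rule is an assumption of this sketch, not a search engine requirement. All names are hypothetical.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Records (level, text) for every <h1>-<h6> heading in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # (level, [text parts]) while inside a heading

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._current = (int(tag[1]), [])

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._current is not None:
            level, parts = self._current
            # Collapse runs of whitespace so the outline reads cleanly.
            self.headings.append((level, " ".join("".join(parts).split())))
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self._current[1].append(data)


def skipped_levels(html):
    """Return headings that jump more than one level deeper than the previous heading."""
    parser = HeadingOutline()
    parser.feed(html)
    issues = []
    previous = 0  # pretend the page starts at "level 0"
    for level, text in parser.headings:
        if level > previous + 1:
            issues.append((level, text))
        previous = level
    return issues
```

Going back up the hierarchy (an h4 followed by an h2) is fine and is not flagged.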

Image Alt Text

Alt text is the written description you assign to images, giving context for accessibility tools and technologies that process visual content.

- Why it’s relevant: Alt text makes your content more accessible for people using screen readers and helps technologies interpret images more efficiently. While tools are improving their ability to “see” images, providing accurate alt text ensures technologies require fewer resources to understand what your visuals represent. This added clarity supports better content discovery across platforms, from AI-driven tools to social media and beyond.

- Rank Beast’s approach: I write alt text that is practical and clear, focusing on descriptions that help people first while improving accessibility. My approach ensures that alt text provides meaningful context for technologies without adding unnecessary complexity or stuffing keywords. It is about connecting visuals with purpose, making your content more inclusive and functional.

- Value: Thoughtfully crafted alt text bridges the gap between visual content and the technologies that interpret it. This enhances accessibility for people and ensures your visuals are properly understood in platforms beyond search engines, including AI tools and social media.
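Missing alt text is easy to audit automatically, even though writing good alt text is not. This minimal sketch lists the `src` of every image that has no `alt` attribute at all. It intentionally does not flag `alt=""`, which is the standard way to mark a purely decorative image so screen readers skip it. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Collects the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        # alt="" is deliberately NOT flagged: an empty alt marks a decorative
        # image that screen readers should skip.
        if "alt" not in attrs:
            self.missing_alt.append(attrs.get("src") or "(no src)")


def images_missing_alt(html):
    parser = AltTextAudit()
    parser.feed(html)
    return parser.missing_alt
```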

Internal Linking

Internal linking refers to links on one page of your site that connect to other pages on the same site, typically as linked text in paragraph copy. These links help people and technologies navigate your content and explore related information seamlessly.

- Why it’s relevant: Internal links improve navigation for people, creating a smoother and more intuitive experience. They also help technologies like AI tools and search engines understand how your pages relate to each other.

- Rank Beast’s approach: I use internal links to guide people naturally through your content, adding them only where they provide meaningful context or lead to the next step. My focus is on making these links purposeful and seamless, avoiding overuse.

- Value: Thoughtful internal linking keeps people engaged by providing clear pathways to explore your site, making it easier for them to find what they need. This approach ensures your content remains easy to navigate.
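To review which internal links a page actually offers, a sketch like the one below collects every `<a href>` on a page, resolves relative links against the page URL, and keeps only those on the same host. Fragments are stripped so `/guide` and `/guide#faq` count as one destination. Treating "same host" as "internal" is an assumption of this sketch; sites that span subdomains would need a looser rule. Names and URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(html, page_url):
    """Return sorted absolute URLs of links that stay on the same host as page_url."""
    parser = LinkCollector()
    parser.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in parser.hrefs:
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        # Skip mailto:, tel:, javascript: and links to other hosts.
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.add(urldefrag(absolute).url)
    return sorted(links)
```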

Technical/Your Website

As search behaviors evolve, the role of websites may shift. If people rely less on traditional websites, these strategies might diminish in importance. However, for industries and audiences where websites remain a key touchpoint, these technical optimizations are vital.

No Broken Links

Broken links are links on your site that lead to error pages or non-existent content. They stop people in their tracks, disrupting their experience and making your site feel outdated or unreliable.

- Why it’s relevant: Broken links frustrate people, leading to a poor browsing experience. They also harm crawl efficiency for search engines, AI-driven tools, and other technologies that process your site. A poorly maintained site can impact visibility and trustworthiness. Properly fixing these links with 301 redirects or updating them to point to active pages ensures your site remains functional and trustworthy.

- Rank Beast’s approach: I regularly audit for broken links, focusing on redirect hygiene. This includes setting up only the redirects you need, updating outdated links, and avoiding chains or loops that slow navigation. My goal is to create a seamless experience for people while ensuring search engines and other technologies can navigate your site effectively.

- Value: A site free of broken links builds trust by offering a smooth browsing experience and a sense of reliability. It ensures search engines and technologies can efficiently crawl your site, keeping your content discoverable and accessible while supporting long-term visibility.
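Redirect hygiene can be checked offline once you have a list of redirects (for example, exported from a redirect plugin or server config; the `{source: target}` map below is hypothetical). This sketch follows each source URL through the map and reports chains (more than one hop before the final page) and loops (a URL that eventually redirects back to a page already in its path).

```python
def trace_redirect(start, redirects, max_hops=10):
    """Follow start through a {source: target} redirect map.

    Returns (path, status), where path lists every URL visited and status
    is "ok" (zero or one hop), "chain" (two or more hops) or "loop".
    """
    path = [start]
    while path[-1] in redirects and len(path) <= max_hops:
        target = redirects[path[-1]]
        if target in path:
            return path + [target], "loop"
        path.append(target)
    hops = len(path) - 1
    return path, ("chain" if hops > 1 else "ok")


def audit_redirects(redirects):
    """Report every source URL whose redirect is a chain or a loop."""
    report = {}
    for source in redirects:
        path, status = trace_redirect(source, redirects)
        if status != "ok":
            report[source] = (status, path)
    return report
```

A flagged chain is usually fixed by pointing the source directly at the last URL in its path.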

XML Sitemap Hygiene

An XML sitemap is a file on your site that lists all the pages you want search engines and other technologies to find. It guides these tools to the content you want people to discover.

- Why it’s relevant: A sitemap tells search engines and technologies which pages on your site you want them to access and present to people. It also provides context to your site’s structure.

- Rank Beast’s approach: I address common sitemap issues by removing errors, redirects, and non-indexable pages from the file. I ensure the sitemap is clean and follows best practices so search engines and other technologies can efficiently crawl and process your site.

- Value: A well-maintained sitemap ensures your most important content is easy to find and access. This supports visibility on search engines, helps technologies navigate your site, and makes it simpler for people to locate the information they’re searching for.
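The cleanup described above can be sketched as a small generator that only lists pages worth crawling. It assumes you already have crawl data for each page (URL, HTTP status, whether it is marked noindex, and an optional last-modified date; these field names are hypothetical) and writes the `urlset`, `url`, `loc`, and `lastmod` elements defined by the sitemaps.org protocol. Anything that is not a 200 response or is marked noindex is left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from dicts with 'url', 'status', and optional 'noindex'/'lastmod' keys.

    Redirects, errors, and non-indexable pages are skipped.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page["status"] != 200 or page.get("noindex", False):
            continue
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["url"]
        if page.get("lastmod"):
            ET.SubElement(url, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```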

Easy-to-Understand and Navigate Site Architecture

Site architecture refers to the navigation menus at the top of your website, internal links (discussed earlier) within page content, and logically organized categories that guide people to content in different places throughout your website. For example, grouping useful tools like calculators, downloadable templates, or educational guides under a “Resources” section helps visitors find specific information or solutions quickly. A well-structured site feels intuitive and keeps people moving naturally through your pages.

- Why it’s relevant: When your site is well-organized, people can quickly find what they need without frustration. A clear hierarchy, starting with high-level pages that lead to more specific content, helps people understand and navigate your site with ease. For search engines and other technologies, this structure highlights how pages are connected.

- Rank Beast’s approach: I design site structures that feel effortless. This means grouping content logically, creating simple and clear navigation menus, and using internal links to guide people naturally to related information. My goal is to create a site that is intuitive for people and easily understood by search engines and other technologies.

- Value: A well-structured site provides a seamless experience, helping people find what they need quickly and efficiently. This also ensures technologies can process and prioritize your content effectively, maintaining your visibility across platforms.
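One way to check whether a hierarchy really leads from high-level pages to specific ones is to measure click depth: how many clicks each page is from the homepage. The sketch below runs a breadth-first search over a hypothetical internal-link map (each page mapped to the pages it links to). Pages that never appear in the result are orphans that no navigation path reaches.

```python
from collections import deque


def click_depth(links, home="/"):
    """Breadth-first search over an internal-link graph.

    links maps each page to the pages it links to. Returns {page: clicks from home}.
    Pages never reached from the homepage are absent from the result.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


site = {
    "/": ["/services", "/resources"],
    "/services": ["/"],
    "/resources": ["/resources/calculators", "/resources/templates"],
    "/resources/templates": ["/resources/templates/content-brief"],
}
depth = click_depth(site)
all_pages = set(site) | {"/resources/calculators", "/resources/templates/content-brief", "/old-landing-page"}
orphans = all_pages - set(depth)
```

Here the content brief sits three clicks deep under Resources, and `/old-landing-page` shows up as an orphan.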

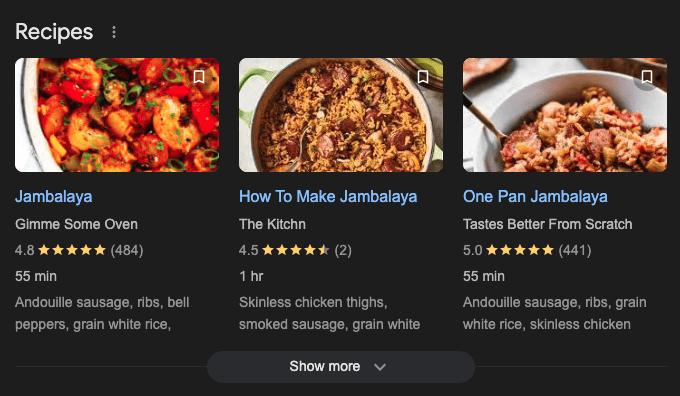

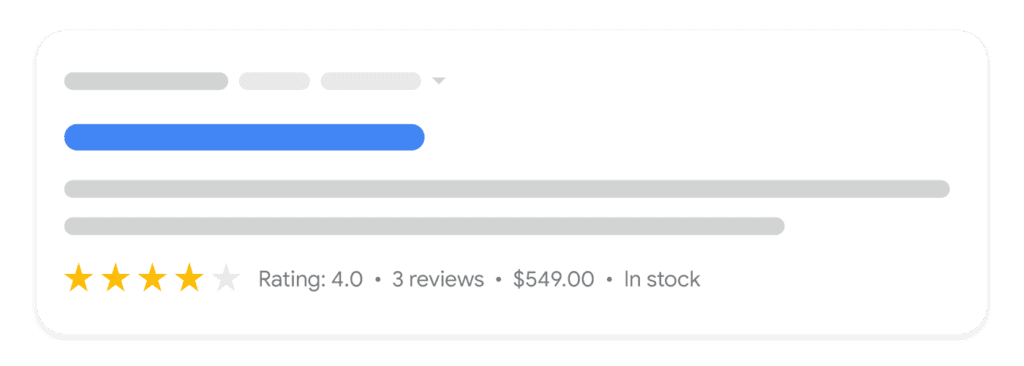

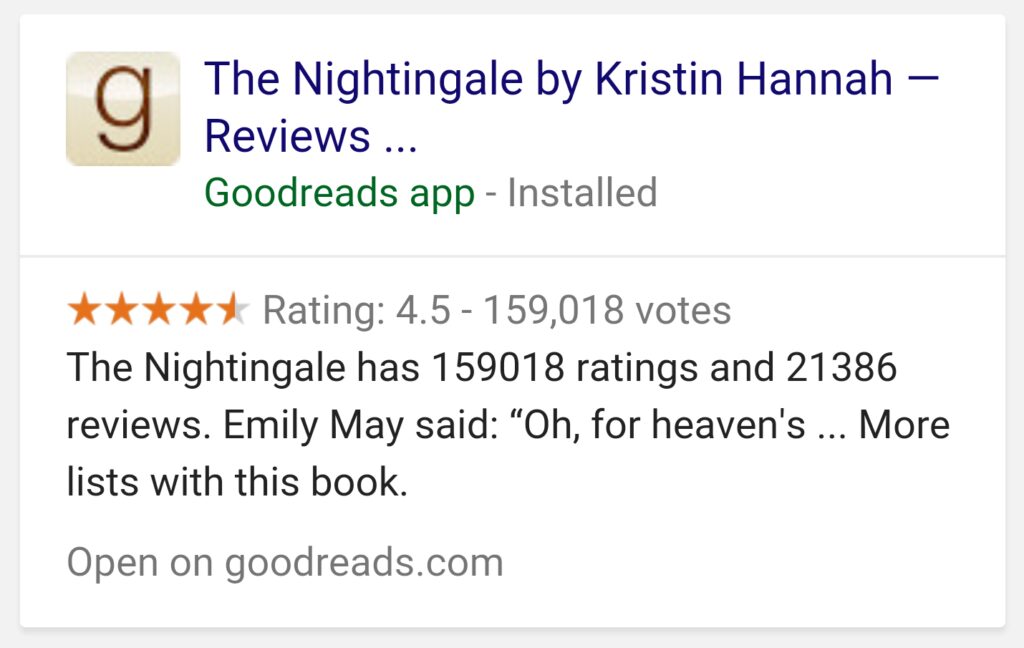

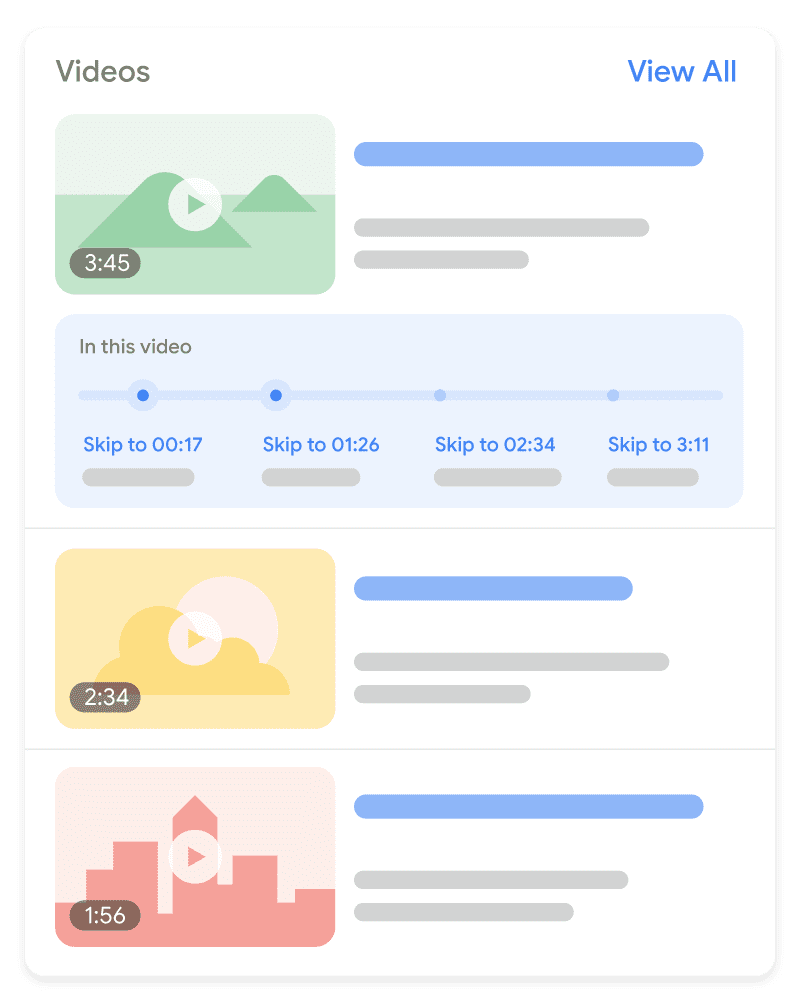

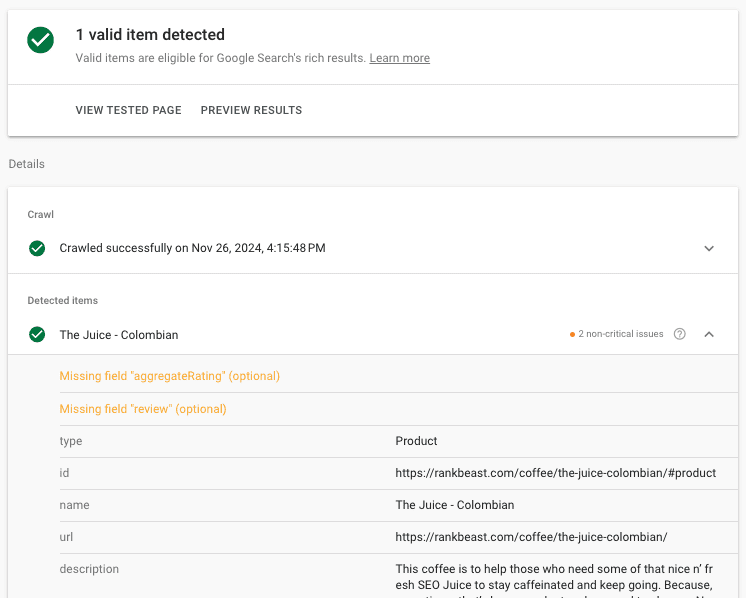

Schema Markup

Schema markup is code added to your website that helps search engines and technologies better understand your content. It identifies specific details, such as FAQs, reviews, or organization information, without requiring these tools to analyze the entire page.

- Why it’s relevant: Schema helps search engines and AI-powered tools process your content quickly and accurately, providing structured data that can be used in a variety of ways. While search engines often display enhanced features like star ratings or event details in results, schema also supports other technologies by offering clear and organized information. This makes your content easier to integrate and utilize across platforms as technology evolves.

- Rank Beast’s approach: I prioritize schema markup because it helps technologies process your content without needing to analyze entire pages. Structured data is a powerful asset that cuts to the chase, enabling search engines and AI tools to quickly and accurately understand what your content is about. By implementing a clear and efficient schema, I ensure the most important details of your site are highlighted, increasing the chances of your content being presented to people across platforms.

- Value: Schema markup ensures your content is properly represented and engaging, whether it’s on search engines or AI-powered platforms. This tactic keeps your site relevant, discoverable, and competitive as search behavior and technologies evolve.
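Structured data is commonly added as JSON-LD inside a `<script type="application/ld+json">` tag. As an illustration, this sketch builds schema.org `FAQPage` markup from question and answer pairs; the helper name and sample text are hypothetical. Valid markup makes these details machine-readable, but whether any search engine shows an enhanced result for it is that search engine’s decision.

```python
import json


def faq_jsonld(pairs):
    """Return a JSON-LD string describing (question, answer) pairs as a schema.org FAQPage."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


snippet = faq_jsonld([("What is Search Optimization?", "Showing up where people naturally search.")])
# Paste the output inside <script type="application/ld+json"> ... </script> on the page.
```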

Image Compression

Image compression reduces the file size of images on your site without losing noticeable quality. Smaller image files load faster, helping your site remain quick and visually appealing across all devices.

- Why it’s relevant: Faster-loading pages improve the experience for people, especially on mobile devices where slower connections can lead to frustration. Search engines also consider page speed an important factor for rankings, meaning compressed images help your site perform better by reducing load times while maintaining high visual quality.

- Rank Beast’s approach: I focus on optimizing images for speed without sacrificing quality. This includes using advanced compression tools or manually resizing files when necessary to achieve the best results. My approach balances maintaining high visual standards with ensuring fast load times across devices.

- Value: Compressed images improve page speed, creating a smoother experience for people and supporting visibility on search engines. This ensures your site is efficient, user-friendly, and capable of meeting modern digital expectations.
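The resizing half of this work is simple arithmetic: scale an image down to fit a maximum box while keeping its aspect ratio, and never scale it up. The 1600-pixel defaults in this sketch are a hypothetical choice, not a standard, and the function name is illustrative; the compression itself (re-encoding the file) would be done afterward by an image tool or library.

```python
def fit_within(width, height, max_width=1600, max_height=1600):
    """Return (new_width, new_height) that fits inside the box, keeping aspect ratio.

    The scale factor is capped at 1.0, so small images are never upscaled.
    """
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, a 4000 by 3000 photo comes out at 1600 by 1200, while an 800 by 600 image is left unchanged.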

Mobile-First

Mobile-first design means your website is built and optimized for mobile devices first (smartphones), then adapted for larger screens like desktops and tablets. This approach ensures your site works well on the devices most people use to browse online.

- Why it’s relevant: Mobile browsing dominates, but not all audiences rely on mobile equally. Some, like older demographics or professionals at work, may use desktops more. Your analytics data shows how much your audience relies on desktop or tablet browsing. Search engines prioritize mobile usability with mobile-first indexing, so mobile design is essential, but the overall design should match how your audience interacts with your site.

- Rank Beast’s approach: I prioritize mobile-first design to ensure your site works seamlessly on smaller screens, but I also consider your audience’s specific browsing habits. If your data shows that desktops or tablets are more commonly used, I adapt the design to prioritize those devices while maintaining mobile usability. My focus is on creating a site that delivers an exceptional experience across all devices, tailored to the behavior of your audience.

- Value: Mobile-first design improves usability, aligns with search engine priorities, and creates a seamless experience that matches how your audience browses online.

Page Speed Optimization

Page speed optimization focuses on making your website load faster by improving how quickly content appears on your site. This includes optimizing images, streamlining code, and enhancing server performance to create a fast and responsive experience.

- Why it’s relevant: A fast-loading site is a good experience for people. Long load times frustrate visitors and often cause them to leave before they even see your content. While search engines use page speed as a ranking signal, the real priority is ensuring your site feels seamless and effortless to use.

- Rank Beast’s approach: I focus on practical changes that improve speed and usability. This includes running manual checks, compressing images, reducing unnecessary code, configuring speed plug-ins, and optimizing server response times. I also rely on dedicated WordPress hosting instead of shared hosting to provide consistent, fast performance. By using high-quality server configurations, I ensure every WordPress site I manage delivers the speed your audience expects.

- Value: Faster page speeds improve usability, keep people engaged, and enhance your site’s overall performance. A fast-loading site not only ranks better in search results but also leaves a lasting positive impression on your audience.

HTTPS for Real Security

HTTPS (Hypertext Transfer Protocol Secure) encrypts the connection between your website and its visitors, providing a secure browsing experience. While it’s essential for sites handling sensitive data, like passwords or payment information, it’s also critical for building trust and meeting modern browser and search engine expectations.

- Why it’s relevant: Even if your site doesn’t handle sensitive data, HTTPS is still important. Visitors expect the padlock icon, and modern browsers warn people when a site isn’t secure. Search engines also consider HTTPS a ranking signal, meaning not having it can harm your visibility and credibility. Improper HTTPS setups can lead to search engines clustering your site with problematic pages, making it harder to fix and reindex later.

- Rank Beast’s approach: I go beyond just enabling HTTPS and displaying the padlock icon. My process includes checking for expired certificates, mixed content errors, and vulnerabilities that could impact your site’s security or credibility. I focus on delivering true protection for your site, ensuring it is trusted by both people and search engines alike.

- Value: A properly implemented HTTPS setup builds trust, protects your site’s credibility, and enhances visibility. Addressing vulnerabilities proactively ensures your site offers a secure and reliable experience, keeping visitors confident and engaged.
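Mixed content means an HTTPS page that loads sub-resources such as images, scripts, or stylesheets over plain HTTP. This sketch scans a page’s HTML for those `http://` sub-resources. It checks only a handful of tag and attribute pairs (an assumption that covers common cases, not every way a page can load a resource), ignores `srcset` and CSS, and does not flag ordinary `<a>` links, since linking to an HTTP page is not mixed content. It will also report `<link>` tags such as an `http://` canonical, which are not sub-resource loads but are usually worth updating anyway. Names are hypothetical.

```python
from html.parser import HTMLParser

# (tag, attribute) pairs that commonly make the browser load a sub-resource.
RESOURCE_ATTRS = {
    ("img", "src"), ("script", "src"), ("iframe", "src"),
    ("audio", "src"), ("video", "src"), ("source", "src"),
    ("link", "href"),
}


class MixedContentScanner(HTMLParser):
    """Collects (tag, url) for sub-resources requested over plain http://."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in RESOURCE_ATTRS and value and value.strip().lower().startswith("http://"):
                self.insecure.append((tag, value))


def find_mixed_content(html):
    scanner = MixedContentScanner()
    scanner.feed(html)
    return scanner.insecure
```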

Off-Page

While your website serves as the foundation of your online presence, the digital world extends far beyond it. Off-page strategies shape how people discover and engage with your brand across search results, platforms, and communities. These efforts not only build trust and boost visibility but also create meaningful connections with your audience where they already are.

Treating the Branded SERP as a Homepage

The branded SERP (Search Engine Results Page) is what people see when they search for your business or brand name. It’s often the first thing potential customers interact with, making it a critical part of your online presence.

- Why it’s relevant: When people search for your brand, they’re looking for confirmation of credibility and quick access to information about you. If your branded SERP is unorganized, filled with irrelevant results, or overshadowed by competitors or unrelated brands, it can confuse visitors and erode trust. A polished branded SERP builds confidence and helps people find what they need easily.

- Rank Beast’s approach: I help you take ownership of your branded SERP by optimizing your website, claiming profiles, and ensuring consistent information across platforms. My focus is on presenting your brand as professional, trustworthy, and easy to engage with, no matter where people find you.

- Value: A well-managed branded SERP gives people confidence in your business, strengthens trust, and ensures they can easily access accurate and engaging information about your brand.

*Reputation Management

Reputation management is about understanding and addressing how people judge your online brand. This involves evaluating and managing reviews, mentions, and other factors that shape trust and credibility.

- Why it’s relevant: People trust what others say about your brand, whether through word of mouth or online reviews. Positive feedback and mentions help build trust, while negative feedback left without a thoughtful response can push people away. A strong online reputation sends a clear signal of trust and makes it easier for people to choose your business over competitors.

- Rank Beast’s approach: While I don’t offer reputation response services, reputation management is essential for maintaining trust and credibility online. I focus on optimizing branded search results, showing you where there’s room for improvement, and strengthening the foundational elements that support your broader reputation efforts.

- Value: Actively managing your reputation enhances trust, strengthens your brand’s visibility, and makes a strong impression on anyone researching your business.

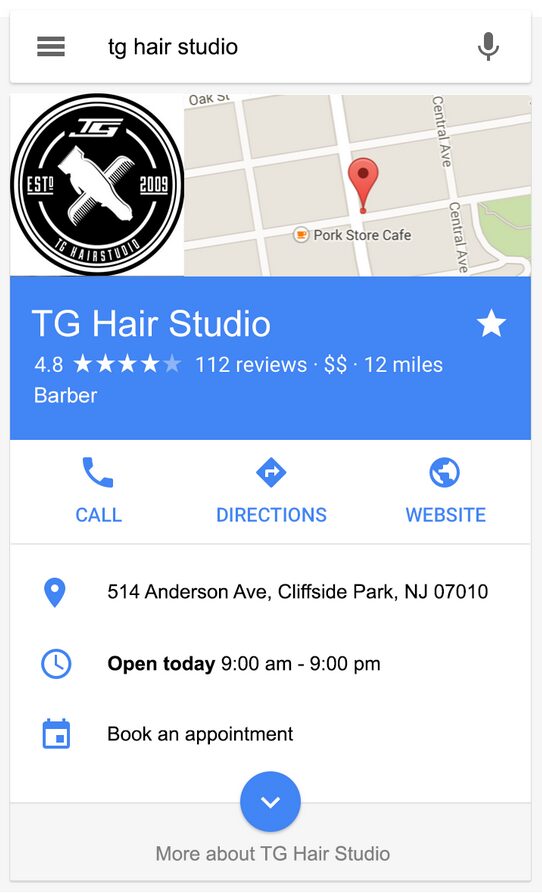

Online Listings/Business Profiles

Online listings and business profiles, like Google Business Profile (formerly Google My Business), display key information about your business, such as your name, address, phone number (NAP), hours, and reviews. They make it easy for people to find you and learn what they need at a glance.

- Why it’s relevant: Accurate and consistent business profiles make it easy for people to find, connect with, and learn more about your business. For local searches, people rely on consistent NAP information across platforms so they see accurate details wherever they look. This improves your visibility in local searches and ensures your business is easy to find.

- Rank Beast’s approach: I prioritize accuracy and consistency across all platforms where your business appears. By aligning your NAP details and maintaining complete, up-to-date profiles, I help establish trust with both people and search engines. My goal is to ensure your business is professional, easy to find, and optimized to stand out in local searches.

- Value: Accurate, well-maintained listings build credibility across the web, making it easy for people to find up-to-date information about your business, from directions and hours to reviews.
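NAP consistency can be spot-checked by normalizing each listing before comparing it, so formatting differences like “Street” versus “St.” do not count as conflicts while real differences do. This sketch assumes US-style phone numbers and uses a deliberately short, hypothetical abbreviation table; the function names and listing data are invented for illustration.

```python
import re

# Hypothetical, intentionally incomplete table of address abbreviations.
ADDRESS_ABBREVIATIONS = {"street": "st", "avenue": "ave", "suite": "ste", "road": "rd"}


def normalize_name(name):
    return " ".join(re.findall(r"[a-z0-9]+", name.lower()))


def normalize_address(address):
    words = re.findall(r"[a-z0-9]+", address.lower())
    return " ".join(ADDRESS_ABBREVIATIONS.get(word, word) for word in words)


def normalize_phone(phone):
    """Keep digits only and drop a leading US country code (assumes US numbers)."""
    digits = re.sub(r"\D", "", phone)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits


NORMALIZERS = {"name": normalize_name, "address": normalize_address, "phone": normalize_phone}


def nap_mismatches(listings):
    """listings maps platform -> {"name", "address", "phone"}.

    Returns {field: {normalized value: [platforms]}} for fields that disagree.
    """
    mismatches = {}
    for field, normalize in NORMALIZERS.items():
        groups = {}
        for platform, info in listings.items():
            groups.setdefault(normalize(info[field]), []).append(platform)
        if len(groups) > 1:
            mismatches[field] = groups
    return mismatches
```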

Social Profile Optimization

Social profile optimization involves configuring your brand’s profiles across social media platforms. This includes consistent branding, accurate information, and clear descriptions that reflect your business.

- Why it’s relevant: Social media profiles often appear in search results and serve as key touchpoints for people discovering your business. They are more than just a place for information. They provide an opportunity for people to engage with your content and connect with your active social strategies. Optimized profiles ensure your brand is consistent and recognizable across platforms, enhancing visibility and guiding people to important destinations like your website.

- Rank Beast’s approach: I focus on creating brand cohesion across all social profiles by aligning logos, descriptions, contact details, and links. By maintaining consistency, I ensure your profiles reflect a professional and trustworthy presence that supports your overall branding efforts.

- Value: Optimized social profiles build trust and make it easy for people to engage with your content. They also support your broader strategies, like brand visibility, contributing to a cohesive online presence that enhances your marketing efforts.

*PR and Backlinking

PR-driven backlinking earns links from high-quality, relevant websites through valuable content and strong relationships. These links often come from media coverage like news articles or blog features, which help build your backlink profile, a collection of links pointing to your site that search engines use to rank your content. While traditional PR focuses on media placements and brand awareness, backlinks are ideally a natural result of these efforts.

- Why it’s relevant: Media placements in reputable sources often include backlinks that signal trust and authority to search engines. These links improve your visibility and strengthen your online presence. High-quality backlinks from trusted sources drive sustainable growth, but many still rely on low-quality practices like buying links or sending excessive outreach to irrelevant websites. While these tactics might promise short-term gains, they often lead to penalties, damage your reputation, and undermine long-term SEO efforts. I see this approach as unethical and a waste of time. Aligning PR with backlinking ensures you avoid these risks while maximizing both branding and SEO benefits.

- Rank Beast’s approach: While I don’t offer PR or backlinking services, they are vital to SEO. I recommend working with professionals who prioritize authentic relationships and meaningful placements over quick, low-quality tactics.

- Value: High-quality PR and backlinking enhance trust, strengthen your site’s authority, and improve search engine rankings. They also create lasting benefits by building a credible online presence that engages your audience and avoids the pitfalls of low-quality techniques.

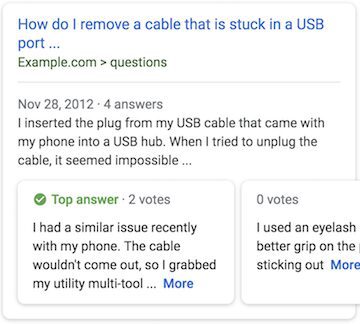

Building and Supporting Online Communities

Leveraging communities means participating in online platforms like Reddit, Quora, and niche forums to build visibility and engagement. It’s about joining conversations and contributing value by answering questions, sharing insights, or discussing relevant topics. Like… Actual value. Authentic, human-to-human interactions.

- Why it’s relevant: These platforms are where people go to ask questions, seek advice, and explore topics they care about. Engaging authentically positions you or your brand as a trusted resource, while overly self-promotional tactics can harm credibility, alienate people, and even violate platform rules. Genuine, value-driven participation fosters trust, builds relationships, and increases visibility over time.

- Rank Beast’s approach: Success in online communities comes from a human-centered approach. It’s about understanding the culture of each platform, respecting its guidelines, and contributing in ways that are genuinely helpful. I recommend focusing on answering questions, sharing knowledge, and offering real value to discussions while avoiding promotional tactics that can backfire.

- Value: Thoughtful participation in communities strengthens your reputation, expands your reach, and builds meaningful connections with your audience. This is not about quick wins but about creating a foundation of trust and visibility that supports long-term growth.