What You Need to Know About Google’s Indexing Reports and Crawl Efficiency

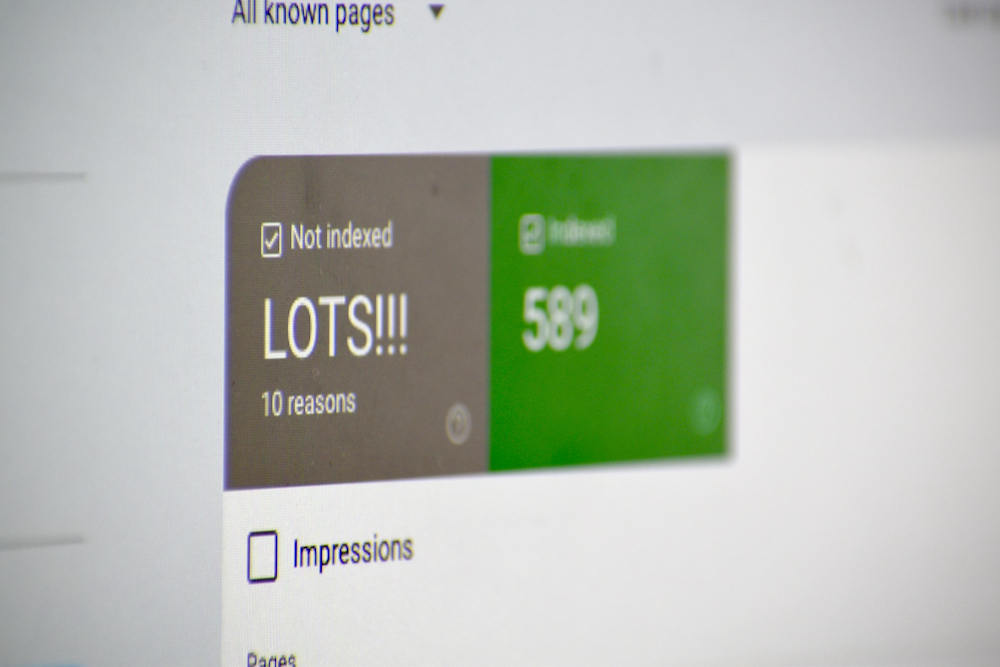

Someone recently came to me, a bit worried. Their Google Search Console (GSC) account reported a ton of “Not Indexed” URLs, and they were concerned about their website’s visibility in search engines. Were they missing out on traffic? Were important pages not showing up in search?

It is a valid concern. Seeing thousands of pages flagged as “Not Indexed” can make any site owner uneasy. But does this actually mean fewer people will find their content?

In this article, I will break down what “Not Indexed” really means in this particular scenario. I will also clear up common misconceptions.

Why “Not Indexed” Pages Seem Like a Big Deal

Thousands of pages flagged as “Not Indexed” in GSC raised important questions. Why were so many pages left out of Google’s index? Was their website’s visibility at risk?

It is reasonable to assume a high count is harmful, and that is exactly what my client was worried about: that Google was ignoring their pages or that something was wrong with their site’s structure. But before jumping to conclusions, I needed to dig in and see what was actually going on.

What “Not Indexed” Actually Means (And Why It’s Often Misunderstood)

Google Search Console’s “Not Indexed” status does not always mean something is wrong. It simply means Google is aware of these pages but has not included them in its index. Most of the time, this is intentional and works to your advantage.

What GSC “Not Indexed” Represents

There are plenty of valid reasons why pages are excluded from the index:

- Redirects guide users and search engines to the correct page. This ensures that only the final destination URL is indexed, not the URL that redirects to it.

- Noindex tags intentionally tell Google not to include certain pages, such as admin login screens or thank-you pages. This is exactly what you want when you set a noindex directive in a robots meta tag or an X-Robots-Tag HTTP header. (Google does not support noindex rules in robots.txt.)

- Canonicalized pages consolidate ranking signals to a single, preferred version of duplicate content. This keeps your site organized and efficient.

- 404 pages, tracking URLs, and low-value system pages are filtered out because they add no real value to users or search engines.

For instance, if you see a URL with tracking parameters like ?utm_source=email in the “Not Indexed” report, that is perfectly fine. In most cases, Google is already indexing the primary version of that page instead.
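To make the tracking-parameter case concrete, here is a minimal Python sketch that reduces a tracked URL to its primary version. The `strip_tracking` helper and the list of parameters it removes are my own assumptions for illustration; adjust them to whatever parameters your site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these cover the tracking parameters used on the site.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"gclid", "fbclid"}


def strip_tracking(url: str) -> str:
    """Return the URL without tracking parameters (hypothetical helper)."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIXES)
        and key.lower() not in TRACKING_NAMES
    ]
    # The fragment is dropped as well, since it never reaches the server.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


primary = strip_tracking("https://example.com/post?utm_source=email")
paged = strip_tracking("https://example.com/shop?page=2&utm_medium=cpc")
```

If the stripped URL is indexed, the tracked variant showing up as “Not Indexed” is the expected outcome.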

The Two Most Popular “Not Indexed” Categories

When searching for answers online about GSC’s “Not Indexed” reports, these two categories come up frequently. And for good reason:

- Crawled – Currently Not Indexed

These are pages Google has crawled but decided not to index. This often happens because the pages are thin on content, duplicate others, or simply do not provide enough value to rank in search results.

- Example: A blog post that repeats information found on another page might fall into this bucket.

- Discovered – Currently Not Indexed

These are pages Google knows about but has not crawled yet. This is usually due to crawl budget limitations or because other pages on the site are prioritized.

- Example: An e-commerce site with thousands of paginated product pages might see many URLs in this category, as Google focuses on more important pages first.

Does a High “Not Indexed” Count Send Negative Signals to Google?

Short answer: No. A high “Not Indexed” count does not hurt rankings or send negative signals to Google. Google’s systems are designed to sort URLs into appropriate categories and filter out those that don’t belong in the index. It’s actually a good sign that Google is organizing your site efficiently.

That said, the real question isn’t the total count. It’s whether any important pages are being left out of the index when they shouldn’t be. If valuable pages like cornerstone blog posts or product pages show up in the “Not Indexed” report, that’s when you need to investigate.
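One way to run that check is to cross-reference the “Not Indexed” list against the pages you care about. The sketch below assumes you have the report as (URL, reason) pairs and keep your own list of important URLs. The `pages_to_investigate` helper and the sample rows are illustrative, not real data.

```python
def pages_to_investigate(not_indexed, important_urls):
    """Return {url: reason} for important URLs that appear as not indexed."""
    important = set(important_urls)
    return {url: reason for url, reason in not_indexed if url in important}


# Sample rows (made up for illustration); reasons follow GSC-style labels.
not_indexed = [
    ("https://example.com/guide", "Crawled - currently not indexed"),
    ("https://example.com/guide?utm_source=email", "Alternate page with proper canonical tag"),
    ("https://example.com/old-page", "Page with redirect"),
]
important = ["https://example.com/guide", "https://example.com/pricing"]

flagged = pages_to_investigate(not_indexed, important)
for url, reason in flagged.items():
    print(f"Investigate: {url} ({reason})")
```

Everything that is not flagged can usually be left alone, however large the total count is.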

What Really Matters: Prioritizing the Right Pages for Indexing

The key isn’t to focus on the total number of “Not Indexed” pages but to ensure Google is indexing the ones that matter most. Here’s what to prioritize:

- Index the right pages: Focus on pages that provide unique value, like cornerstone content, high-traffic blog posts, or important product pages. These are the pages you want people to find in search.

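- Clean up GSC reports: Use robots.txt rules or noindex tags so that irrelevant URLs, like tracking or system-generated pages, are deliberately excluded and land in the right “Not Indexed” category. Keep in mind that the two work differently: robots.txt stops Google from crawling a URL, while a noindex tag only takes effect if Google can crawl the page and see it. This helps you better understand how Google is handling your site and makes ongoing management more efficient.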

- Improve crawl efficiency: Ensure Google prioritizes crawling valuable, indexable pages by refining your robots.txt file, setting appropriate directives, and minimizing unnecessary system-generated or low-value URLs that waste crawl resources.

This sets the stage for Google to focus its resources on indexing the pages that truly matter, making sure the most valuable content gets the attention it deserves.
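Before deploying robots.txt changes, it helps to test them against a few real URLs. Here is a rough sketch using Python’s standard `urllib.robotparser`. The rules, the URLs, and the `blocked_urls` helper are assumptions for illustration. As far as I know, Python’s parser does not implement Google’s wildcard matching, so the sketch sticks to plain path prefixes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of internal search and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/blog/indexing-guide",
    "https://example.com/search?q=shoes",
    "https://example.com/cart/checkout",
]
blocked = blocked_urls(ROBOTS_TXT, urls)
```

If an important page ends up in `blocked`, the rule is too broad. Remember that a disallow rule only stops crawling; it is not a noindex directive.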

Why Addressing Crawl Directives and Canonicals for SEO and GSC Matters

When Google crawls a website, it does not index everything it finds. Instead, it processes and categorizes URLs based on how relevant and valuable they seem. This is where crawl directives and canonicalization play a critical role. They help control how Google interacts with a site’s pages, ensuring that its resources are spent on what truly matters.

Beyond search engines, these directives help technologies such as AI models, content aggregators, and other web-based systems understand how to interact with a website. Robots.txt tells crawlers which URLs they may fetch, while canonical tags help determine the right version of a page.

As AI-driven search evolves, ensuring that the right content is indexed will be even more important. Whether a page appears in traditional search results, AI-generated answers, or content discovery engines, properly structuring crawl directives ensures long-term visibility.

By optimizing crawl directives, we can:

- Prevent Google from wasting crawl budget on tracking URLs or system-generated pages.

- Make it easier for Google to prioritize important content.

- Ensure that reports in GSC accurately reflect the pages that should be indexed.

Canonical tags, on the other hand, help consolidate duplicate or similar content, so Google understands which version of a page should be indexed and ranked. Without proper canonicalization, search engines might split ranking signals across multiple URLs or index pages that are not intended to be found in search.
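To verify which URL a page declares as canonical, you can read the `<link rel="canonical">` element from its HTML. This is a minimal sketch using only Python’s standard library. The `find_canonical` helper and the sample markup are hypothetical, and fetching the HTML is left out.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical


page = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
canonical = find_canonical(page)
```

If a duplicate page’s canonical points to the preferred URL, seeing the duplicate under “Not Indexed” is what you want. A canonical tag is a hint, though, and Google may still pick a different URL.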

What to Focus on When You See ‘Not Indexed’

Not every “Not Indexed” URL is a problem. Seeing a high number of pages in this category can be alarming at first, but the key is understanding which URLs are being left out and why. Some should be indexed. Others are better off ignored. Here is how to tell the difference.

When to Worry

A “Not Indexed” report is worth investigating if:

- Key pages are missing. If blog posts, product pages, or service pages that should be discoverable in search are not being indexed, that is a sign something may be wrong.

- Crawl budget is being wasted. If Google is spending time crawling duplicate or unnecessary pages instead of focusing on important content, it could be hurting overall indexing efficiency.

In both cases, the solution is not to get every page indexed but to guide Google toward indexing the right ones.
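When a key page is missing, a stray noindex directive is one of the first things I would rule out. The sketch below checks a page’s robots meta tags and an optional X-Robots-Tag header value. The `has_noindex` helper is hypothetical, and it ignores header values scoped to a specific crawler (for example `googlebot: noindex`).

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html, x_robots_tag=""):
    """Return True if the page or the header value asks not to be indexed."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    header = {part.strip().lower() for part in x_robots_tag.split(",") if part.strip()}
    directives = finder.directives | header
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


blocked_page = has_noindex('<meta name="robots" content="noindex, follow">')
open_page = has_noindex('<meta name="robots" content="index, follow">')
```

If this returns True for a page that should rank, removing the directive is the fix. No amount of internal linking will get the page indexed while it is there.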

When NOT to Worry

Not all “Not Indexed” pages are cause for concern. These exclusions are part of how Google maintains an efficient index and don’t indicate a problem. It is completely normal to see:

- Redirected pages. Google will exclude pages that 301 or 302 redirect to another location because the final URL is the one that matters.

- System-generated URLs. Internal search results pages, tracking links, and session-based URLs often appear here. These do not belong in the index anyway.

- Canonicalized duplicates. If a page properly points to another as the canonical version, Google will index the preferred URL while leaving the duplicate out.

These exclusions help Google maintain a clean and efficient index. If these types of URLs are appearing in your report, there is nothing to fix.

What to Focus On

Instead of worrying about the total “Not Indexed” count, the goal is to make sure Google is prioritizing the pages that actually matter.

- Prioritize indexing for valuable pages. Blog posts, landing pages, and service pages that drive traffic should be indexed and crawlable. Use tools like Screaming Frog and GSC’s URL Inspection tool to identify any important pages that are missing.

- Ensure Google is focusing on content that matters. If a site has too many low-value URLs cluttering up crawl activity, work on refining robots.txt directives, canonicalization, or internal linking to direct Google toward high-value content.

A high “Not Indexed” count is not inherently bad. The real question is whether Google is skipping pages that should be indexed or just filtering out the ones that do not belong in search. Understanding this difference is what actually matters.